From Code to Prompts: Rethinking Version Control for AI

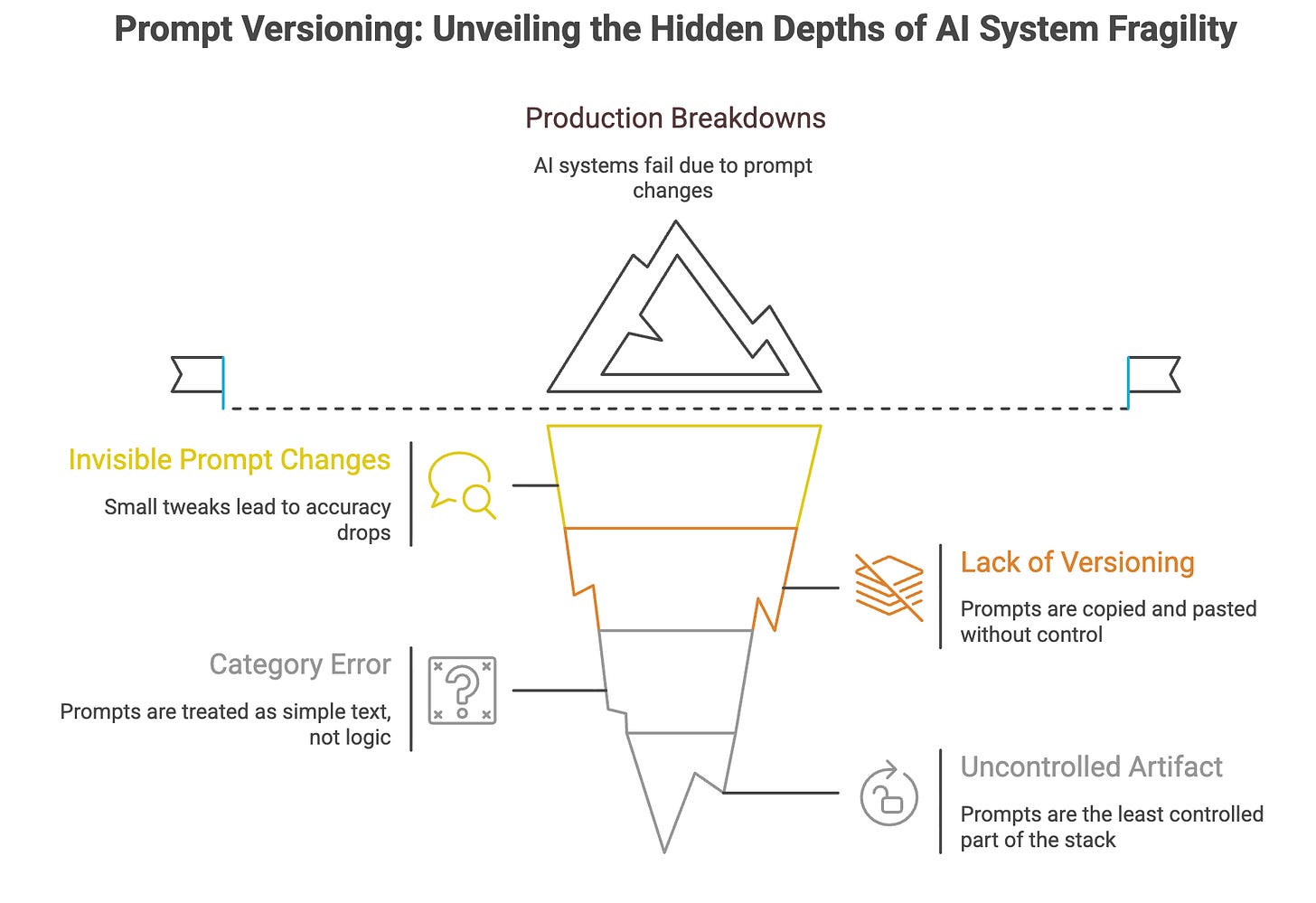

What breaks when prompts aren’t managed

Most AI teams don’t break production with bad models.

They break it with invisible prompt changes.

Someone tweaks a sentence.

Accuracy drops.

No one knows why.

That’s not an AI problem.

That’s a DevOps failure.

AI teams version code.

They version infrastructure.

They version data.

But the most critical logic in the system - the prompt - is often copied, pasted, and “improved” with zero discipline.

That’s why AI systems feel fragile.

And why teams can’t explain regressions.

This post is about prompt versioning - what it is, why it’s mandatory, and how serious AI teams actually do it.

Why Prompts Are Not “Just Text”

Most teams treat prompts as:

strings

configuration

glue code

That is a category error.

A prompt defines:

system behavior

reasoning style

safety boundaries

output structure

failure modes

In AI systems, prompts are executable logic.

Changing a prompt is closer to changing:

a pricing rule

a security policy

a business workflow

than to editing copy.

And yet prompts are often the least controlled artifact in the stack.

The Real Reason AI Systems Feel Unstable

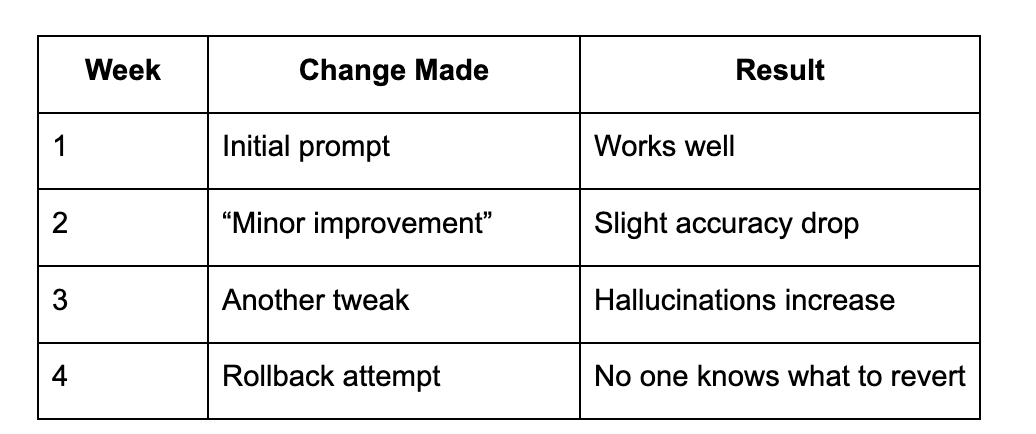

Here’s the pattern every AI team experiences:

No model change.

No infra change.

No data change.

Only prompt drift.

Without versioning:

regressions are invisible

experiments are irreversible

debugging becomes guesswork

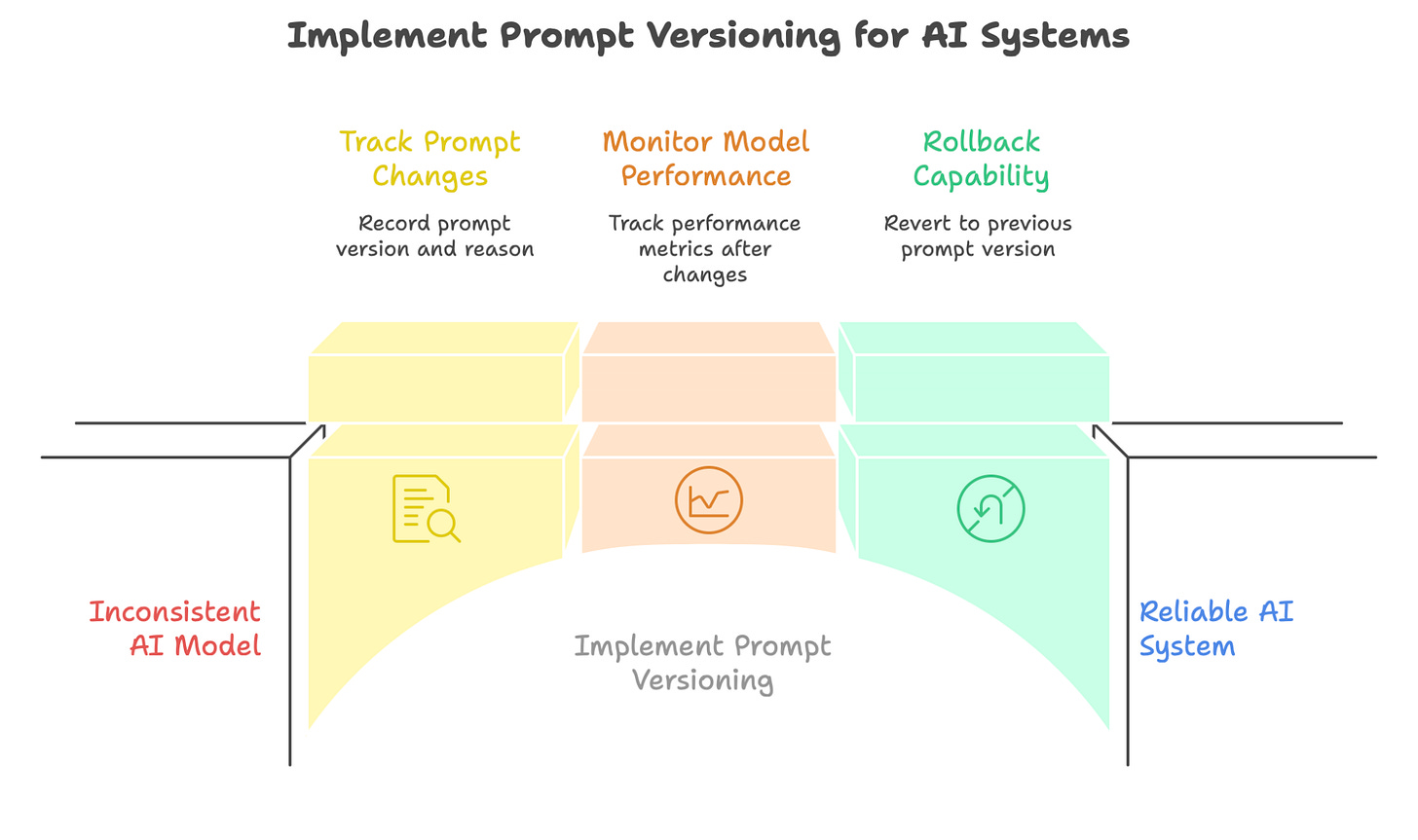

What Prompt Versioning Actually Means

Prompt versioning is not:

adding comments

saving prompts in Notion

naming files prompt_v2_final_final.txt

Prompt versioning means:

Treating prompts as first-class, versioned, testable, rollbackable artifacts — just like code.

That requires:

structure

diffs

ownership

evaluation

release discipline

The Anatomy of a Production Prompt (What Teams Miss)

A real prompt is not a paragraph.

It’s a composite system.

Example: Structured Prompt Layout

prompt_id: invoice_summarizer

version: v1.3.2

owner: payments_team

purpose: Summarize invoice data accurately

model: gpt-4.1

[System]

You are a financial analysis assistant.

[Constraints]

- Do not invent numbers

- Use retrieved data only

- If data is missing, say “Not available”

[Reasoning]

Think step-by-step before answering.

[Output Format]

{
  "summary": "",
  "total_amount": 0,
  "risk_flags": []
}

[Failure Behavior]

If confidence < 0.7, ask for clarification.

Every section has behavioral implications.

Without structure, versioning is meaningless.
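One way to make that structure concrete is to hold the prompt as data rather than as a loose string. The sketch below is a minimal illustration, not a prescribed implementation: the `PromptVersion` class and its `render` method are hypothetical names, and only two of the sections from the layout above are filled in for brevity.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptVersion:
    """One version of a prompt: metadata plus named sections (hypothetical type)."""
    prompt_id: str
    version: str
    owner: str
    purpose: str
    model: str
    sections: dict[str, str] = field(default_factory=dict)

    def render(self) -> str:
        """Join the sections, in insertion order, into the text sent to the model."""
        return "\n\n".join(
            f"[{name}]\n{body}" for name, body in self.sections.items()
        )


invoice_summarizer = PromptVersion(
    prompt_id="invoice_summarizer",
    version="v1.3.2",
    owner="payments_team",
    purpose="Summarize invoice data accurately",
    model="gpt-4.1",
    sections={
        "System": "You are a financial analysis assistant.",
        "Constraints": "- Do not invent numbers\n- Use retrieved data only",
    },
)

print(invoice_summarizer.render())
```

Because section order is explicit, moving a constraint lower in the prompt shows up as a reviewable change instead of a silent edit.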

Why Small Prompt Changes Cause Big Failures

Prompts interact with models non-linearly.

Example changes that cause regressions:

moving a constraint lower in the prompt

adding “be concise”

changing output order

adding one example

modifying tone instructions

Example: Seemingly Harmless Edit

- “Do not invent numbers”

+ “Avoid inventing numbers”

Result:

hallucination rate ↑

confidence ↑

detection ↓

Without versioning + evals, this looks like “model randomness.”

It’s not.

It’s an untracked logic change.

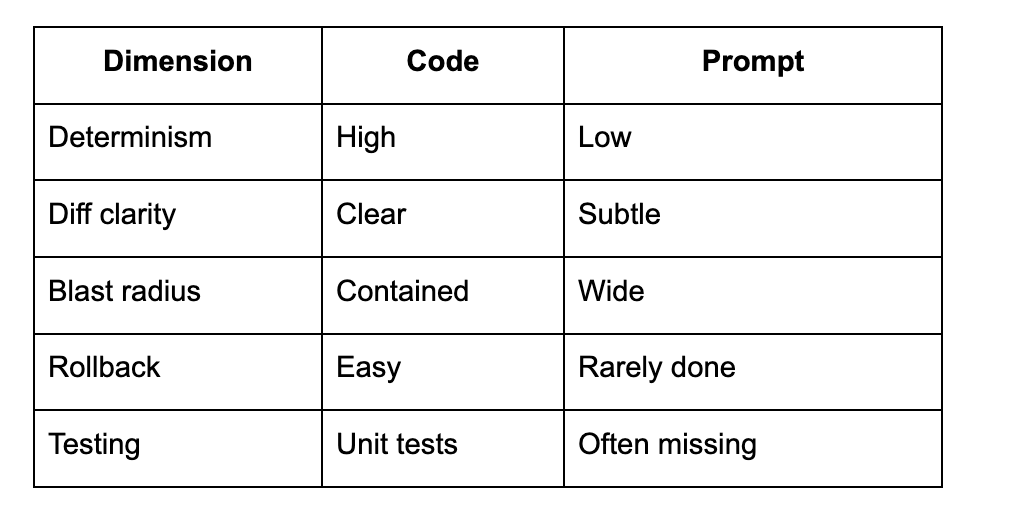

Prompt Versioning vs Code Versioning

This makes versioning prompts more important than code, not less.

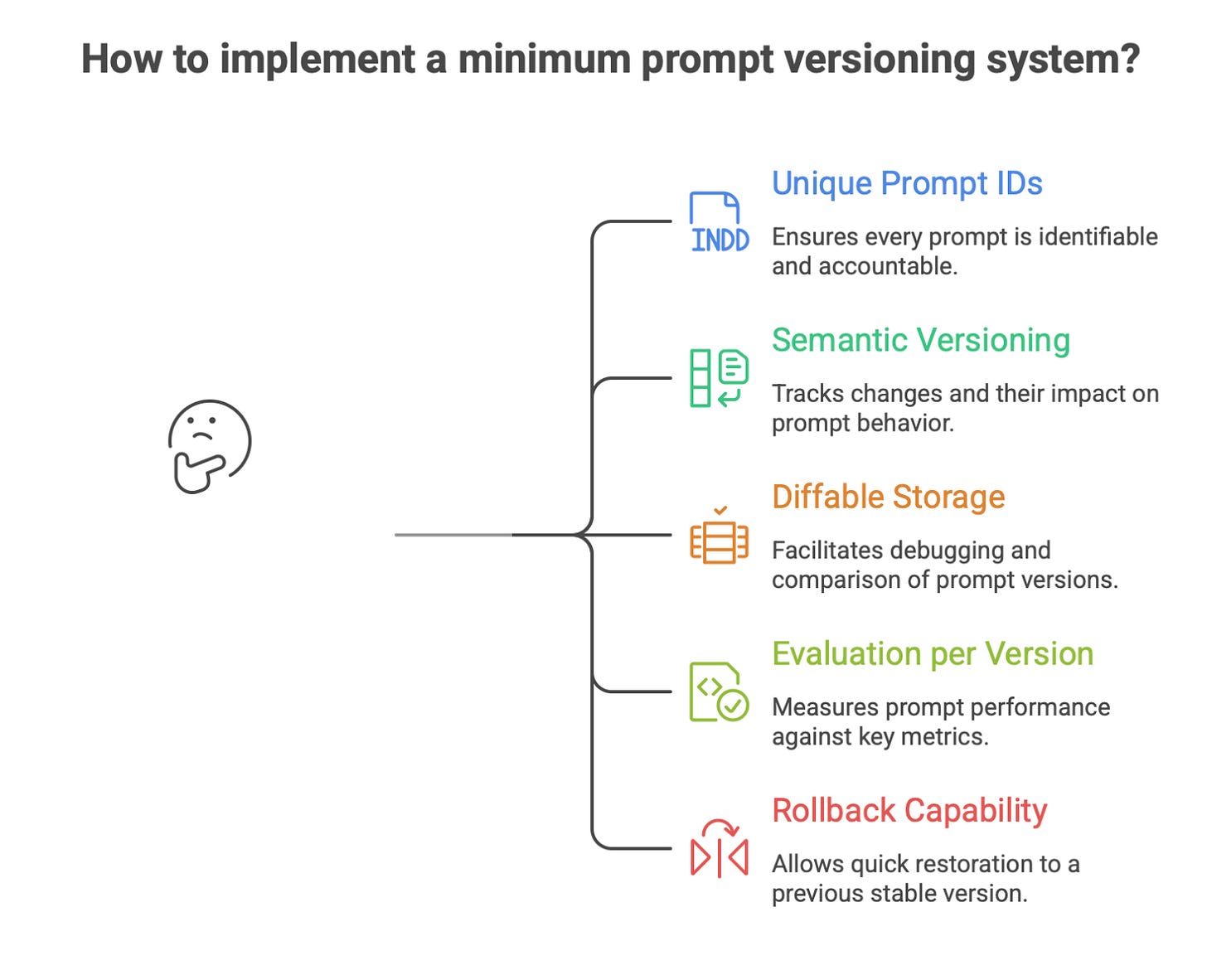

The Minimum Prompt Versioning System

You don’t need complex tooling.

You need discipline.

1. Unique Prompt IDs

Every prompt must have:

a name

an owner

a purpose

No anonymous prompts.

2. Semantic Versioning

Use real versions:

v1.0.0 - baseline

v1.1.0 - behavior change

v1.1.1 - formatting fix

If behavior changes, the minor version must change.
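The rule can be encoded so nobody has to remember it. This is a small sketch under assumptions: the `bump` helper is a hypothetical name, and treating a "major" bump as a breaking change (for example, a new output schema) is borrowed from general semantic versioning practice rather than stated in this post.

```python
def bump(version: str, change: str) -> str:
    """Return the next version label for a given kind of prompt change.

    change is one of:
      "major": breaking change (assumption, not defined in the post)
      "minor": behavior change
      "patch": formatting fix
    """
    major, minor, patch = (int(part) for part in version.lstrip("v").split("."))
    if change == "major":
        return f"v{major + 1}.0.0"
    if change == "minor":
        return f"v{major}.{minor + 1}.0"
    if change == "patch":
        return f"v{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change}")


print(bump("v1.0.0", "minor"))  # v1.1.0
print(bump("v1.1.0", "patch"))  # v1.1.1
```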

3. Diffable Storage

Prompts must live where diffs are visible:

Git

prompt registries

config repos

If you can’t diff it, you can’t debug it.
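Even without a registry, Python's standard `difflib` module can produce a diff between two prompt texts. The sketch below reuses the "harmless edit" from earlier; the `v1.4.0` label is a hypothetical next version, chosen because the wording change alters behavior.

```python
import difflib

old = "- Do not invent numbers\n- Use retrieved data only\n"
new = "- Avoid inventing numbers\n- Use retrieved data only\n"

# unified_diff yields header lines, a hunk marker, then -/+ lines for changes.
diff = list(
    difflib.unified_diff(
        old.splitlines(keepends=True),
        new.splitlines(keepends=True),
        fromfile="invoice_summarizer@v1.3.2",
        tofile="invoice_summarizer@v1.4.0",
    )
)

print("".join(diff))
```

If prompts live in Git, `git diff` gives the same view for free; the point is only that the change is visible before it ships.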

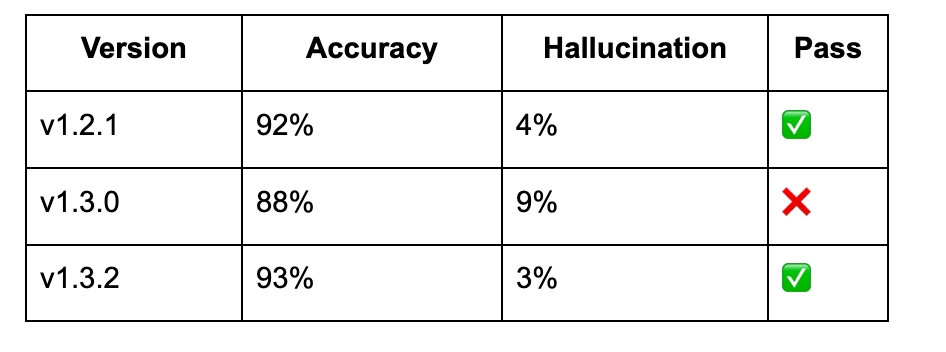

4. Evaluation per Version

Every prompt version must be evaluated against:

baseline accuracy

hallucination rate

refusal behavior

Example eval table:

No eval = no release.
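A release gate along these lines can be a few lines of code. This is a sketch, assuming each version's eval run yields three metrics named `accuracy`, `hallucination_rate`, and `refusal_rate`; those names, the `release_blockers` helper, the tolerance, and the numbers in the example are all illustrative, not measured results.

```python
def release_blockers(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.01,
) -> list[str]:
    """Return reasons to block a release; an empty list means the gate passes."""
    blockers = []
    if candidate["accuracy"] < baseline["accuracy"] - tolerance:
        blockers.append("accuracy regressed")
    if candidate["hallucination_rate"] > baseline["hallucination_rate"] + tolerance:
        blockers.append("hallucination rate increased")
    # Refusals are checked in both directions: more or fewer can both be a problem.
    if abs(candidate["refusal_rate"] - baseline["refusal_rate"]) > tolerance:
        blockers.append("refusal behavior shifted")
    return blockers


# Made-up numbers purely to exercise the gate.
baseline = {"accuracy": 0.90, "hallucination_rate": 0.05, "refusal_rate": 0.10}
candidate = {"accuracy": 0.91, "hallucination_rate": 0.09, "refusal_rate": 0.10}

print(release_blockers(baseline, candidate))
```

Here the candidate is slightly more accurate but hallucinates more, so the gate blocks it. Which metrics matter and how strict the tolerance should be are decisions for the owning team.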

5. Rollback Capability

Prompt rollback should be:

one config change

not a rewrite

not a guess

If rollback takes more than a few minutes, your system is fragile.
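Rollback stays cheap when published versions are immutable and "live" is just a pointer. The in-memory `PromptRegistry` below is a hypothetical sketch of that idea; a real setup might keep the pointer in a config file or a feature flag instead.

```python
class PromptRegistry:
    """Keeps every published version plus a history of which one was live."""

    def __init__(self) -> None:
        self._versions: dict[str, str] = {}  # version label -> prompt text
        self._history: list[str] = []        # activated versions, oldest first

    def publish(self, version: str, text: str) -> None:
        if version in self._versions:
            raise ValueError(f"{version} already exists; versions are immutable")
        self._versions[version] = text

    def activate(self, version: str) -> None:
        if version not in self._versions:
            raise KeyError(version)
        self._history.append(version)

    def rollback(self) -> str:
        """Re-point to the previously live version and return its label."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    def live(self) -> tuple[str, str]:
        version = self._history[-1]
        return version, self._versions[version]


registry = PromptRegistry()
registry.publish("v1.3.2", "- Do not invent numbers")
registry.publish("v1.4.0", "- Avoid inventing numbers")
registry.activate("v1.3.2")
registry.activate("v1.4.0")

print(registry.rollback())  # v1.3.2
```

Nothing is rewritten during rollback: the old text is still there, and only the pointer moves.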

Prompt Versioning in Multi-Agent Systems

In agentic systems:

prompts interact

reasoning compounds

failures cascade

A single prompt change in:

planner

executor

evaluator

can destabilize the entire system.

Without versioning:

root cause analysis becomes impossible

agent behavior feels “random”

trust collapses internally
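With versioning in place, root cause analysis can start from a simple question: which agent's prompt changed between a good run and a bad one? The sketch below assumes each run logs a manifest mapping agent role to prompt version; the `changed_prompts` helper and the version numbers are hypothetical.

```python
from typing import Optional

Manifest = dict[str, str]  # agent role -> prompt version used in that run


def changed_prompts(
    good_run: Manifest, bad_run: Manifest
) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Return the roles whose prompt version differs, as (good, bad) pairs."""
    roles = sorted(set(good_run) | set(bad_run))
    return {
        role: (good_run.get(role), bad_run.get(role))
        for role in roles
        if good_run.get(role) != bad_run.get(role)
    }


good = {"planner": "v2.1.0", "executor": "v1.0.3", "evaluator": "v1.2.0"}
bad = {"planner": "v2.2.0", "executor": "v1.0.3", "evaluator": "v1.2.0"}

print(changed_prompts(good, bad))  # {'planner': ('v2.1.0', 'v2.2.0')}
```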

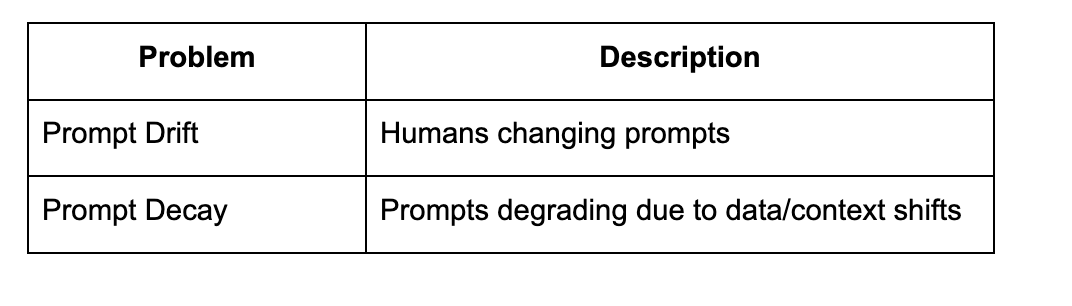

Prompt Drift vs Prompt Decay

Two different problems teams confuse:

Versioning helps detect drift.

Evals + monitoring help detect decay.

You need both.
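For the drift half, a content fingerprint is often enough. The sketch below assumes drift means the deployed prompt text no longer matches the text recorded for its version; `fingerprint` and `has_drifted` are hypothetical helper names built on the standard `hashlib` module.

```python
import hashlib


def fingerprint(prompt_text: str) -> str:
    """SHA-256 hex digest of the exact prompt text."""
    return hashlib.sha256(prompt_text.encode("utf-8")).hexdigest()


def has_drifted(live_text: str, registered_fingerprint: str) -> bool:
    """True if the live prompt differs from the version that was registered."""
    return fingerprint(live_text) != registered_fingerprint


registered = fingerprint("- Do not invent numbers")

print(has_drifted("- Do not invent numbers", registered))    # False
print(has_drifted("- Avoid inventing numbers", registered))  # True
```

A fingerprint only says that the text changed. Decay, where the text is identical but results degrade, still needs evals and monitoring to catch.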

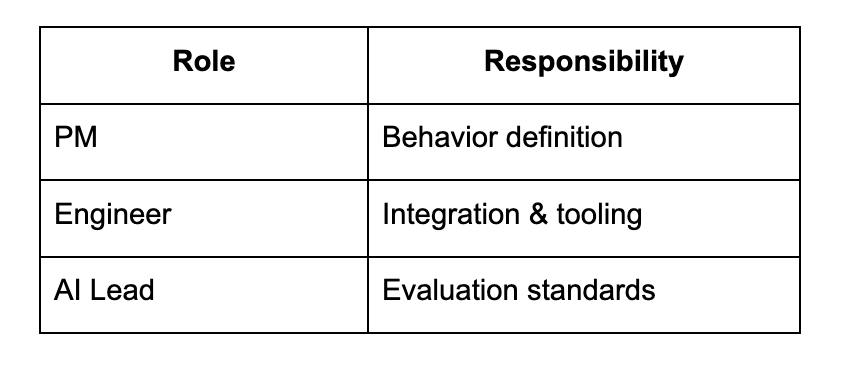

Who Should Own Prompt Versioning?

Not:

“whoever touched it last”

Ownership must be explicit.

Prompt versioning is cross-functional, like APIs.

Why This Practice Separates Mature Teams From Everyone Else

Immature AI teams say:

“The model is inconsistent.”

Mature teams say:

“Prompt v1.4.0 regressed faithfulness by 6%.”

That difference is everything.

Because the second team:

knows what broke

knows when it broke

knows how to fix it

The first team debates opinions.

AI systems don’t become unreliable because models are unpredictable.

They become unreliable because logic changes are invisible.

Prompts are logic.

And logic without versioning is technical debt.

If your team cannot answer:

which prompt version is live

why it was changed

what behavior it altered

how to roll it back

Then you don’t have an AI system.

You have a demo held together by hope.

Prompt versioning is not a nice-to-have.

It is the missing DevOps practice in AI teams.

And teams that adopt it early will ship faster, safer, and with far less drama.