What is MCP? (Model Context Protocol)

The Universal Protocol That's Transforming AI from Smart Chatbots into Capable Assistants.

Chatbots like Claude, ChatGPT, and Gemini are incredibly smart but frustratingly isolated. On their own, they can't access your files, connect to databases, or pull real-time information.

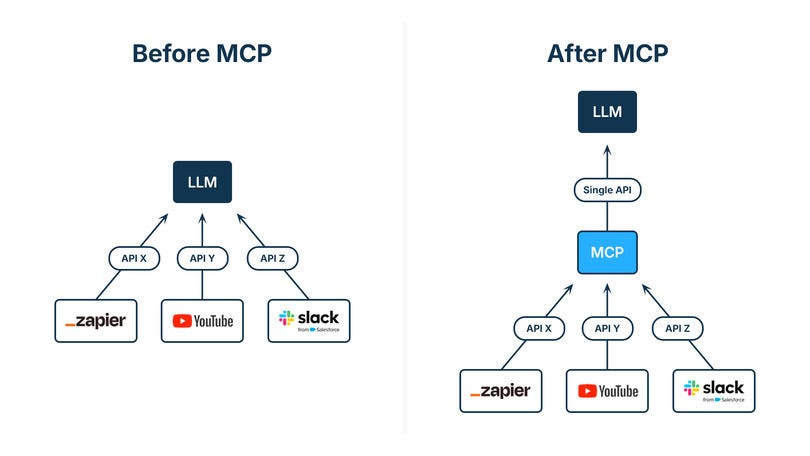

The Solution: Model Context Protocol (MCP) - think universal USB for AI connections.

MCP is an open protocol that standardizes how applications provide context to LLMs. You can think of MCP as the USB-C port for AI applications. Just as USB-C standardizes how your devices connect to peripherals and accessories, MCP standardizes how AI applications connect to data sources and tools.

It's an open-source protocol built to securely link AI tools (like assistant agents) to your data sources, such as your company CRM, a Slack workspace, or a dev server. In short, your AI assistant can find relevant data in those tools and act on it: pushing a record update, sending a message, or deploying code. That combination of reading and acting is what makes AI experiences more context-aware and proactive.

Originally developed at Anthropic (the company behind Claude), MCP has since been adopted by OpenAI and by a number of other AI platforms such as Replit, Sourcegraph, and Windsurf.

This blog breaks down how the Model Context Protocol actually works under the hood and what it can do for you.

We'll cover how it tackles the tricky problems that come up when trying to get AI to play nice with other software, plus give you practical steps to start building AI apps that do way more than just basic back-and-forth conversations.

What problem is MCP solving?

Traditional AI excels at answering basic questions based on its training data. But what happens when you need it to understand your unique business, analyze real-time data, or even perform tasks within your existing systems?

This is where standard AI falls short. It struggles with:

Specific Business Context: Knowing your latest sales figures, competitor strategies, or even your boss's email.

Accessing Live Data: Connecting to your internal systems for up-to-the-minute information.

Taking Action: Moving beyond insights to actually execute tasks like sending reports, updating databases, or communicating with your team.

MCP (a common language for AI tools) bridges this gap. It acts as a universal translator, enabling AI to:

Access and understand information from your actual business systems.

Leverage features from other applications to perform real-world tasks.

With MCP, AI isn't just about smart answers; it's about intelligent action. It empowers AI to "roll up its sleeves and get the work done," transforming insights into tangible results.

MCP architecture and core components

The Model Context Protocol (MCP) operates on a straightforward client-server model. It draws inspiration from the Language Server Protocol (LSP), which standardizes how code editors and other development tools talk to language-specific servers for features like autocomplete and go-to-definition.

Similarly, MCP provides a common framework for AI applications to:

Connect with external systems.

Facilitate organized information exchange.

In essence, MCP creates a standardized language, allowing AI to seamlessly integrate and interact with a diverse range of outside platforms.

Core Components:

How does MCP help AI do more? It comes down to the core design. MCP uses a small set of components, each with one job, that together connect AI tools to outside systems and data. This means your AI apps won't just understand information; they can act on it.

MCP architecture consists of four primary elements:

Host application: These are the AI tools that you actually chat with and that kick off connections to other systems. Think Claude Desktop, coding assistants like Cursor, or regular AI chat websites.

MCP client: This sits inside your AI app and handles all the behind-the-scenes work of connecting to MCP servers. It's like a translator that helps your AI app understand what it's getting from outside sources. For example, Claude Desktop has one of these built right in.

MCP server: These add new abilities and information to your AI by connecting it to specific tools or data sources. Each server usually focuses on one thing - like connecting to GitHub for code stuff or hooking up to a database for storing information.

Transport layer: This is just how the client and server actually talk to each other. MCP has two main ways to do this:

STDIO: Used when everything runs on the same computer - the server and client are basically neighbors.

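HTTP+SSE: For when the server is somewhere else on the network - the client sends messages as regular HTTP requests and gets responses back over a Server-Sent Events (SSE) stream. Newer revisions of the MCP specification replace this transport with Streamable HTTP.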

All communication in MCP uses JSON-RPC 2.0 as the underlying message standard, providing a standardized structure for requests, responses, and notifications.
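To make the three message kinds concrete, here is a minimal Python sketch of JSON-RPC 2.0 requests, responses, and notifications. The helper names (make_request, make_notification, make_result, make_error, frame) are made up for this post and are not part of any MCP SDK. The method names tools/list and notifications/initialized come from the MCP specification, and the framing helper assumes a stdio-style transport that sends one JSON message per line.

```python
import json
from itertools import count

_ids = count(1)  # each request in a session needs its own id


def make_request(method, params=None):
    """A request carries an id, so the receiver has to answer it."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method, params=None):
    """A notification has no id, so no response is expected."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_result(request, result):
    """A successful response echoes the id of the request it answers."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def make_error(request, code, message):
    """A failed response carries an error object instead of a result."""
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "error": {"code": code, "message": message},
    }


def frame(msg):
    """Serialize to a single line, as a newline-delimited transport would."""
    return json.dumps(msg, separators=(",", ":")) + "\n"


if __name__ == "__main__":
    req = make_request("tools/list")
    print(frame(req), end="")
    print(frame(make_result(req, {"tools": []})), end="")
    print(frame(make_error(req, -32601, "Method not found")), end="")
    print(frame(make_notification("notifications/initialized")), end="")
```

The only structural difference between a request and a notification is the id field, which is how either side knows whether a reply is owed.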

How does MCP work?

When you're using an AI app that works with MCP, there's actually quite a bit happening behind the scenes to make everything work smoothly between the AI and other programs. Let's walk through what's really going on when you ask Claude Desktop to do something that needs to reach outside of just the chat.

Protocol handshake

Initial connection: When your AI app (like Claude Desktop) fires up, it automatically reaches out to connect with any MCP servers you have set up on your computer.

Capability discovery: Your AI app basically asks each server "Hey, what can you do for me?" and the servers reply with a list of what they offer: tools (actions the AI can run), resources (data it can read), and prompts (ready-made templates).

Registration: Your AI app takes note of all these capabilities and makes them ready to use whenever you need them in your conversations.
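The three handshake steps above can be sketched in a few lines of Python. This is a toy, in-process stand-in and not a real client or server: ToyWeatherServer, its get_weather tool, the example-host client name, and the handshake function are all hypothetical. The method names (initialize, notifications/initialized, tools/list) and the rough message shapes follow the MCP specification, and the protocol version string is just one published revision picked for illustration.

```python
class ToyWeatherServer:
    """Hypothetical in-process stand-in for an MCP server."""

    TOOLS = [
        {
            "name": "get_weather",
            "description": "Current weather for a city",
            "inputSchema": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        }
    ]

    def handle(self, msg):
        if "id" not in msg:
            return None  # notifications get no reply
        method = msg["method"]
        if method == "initialize":
            result = {
                "protocolVersion": msg["params"]["protocolVersion"],
                "capabilities": {"tools": {}},  # this server only offers tools
                "serverInfo": {"name": "toy-weather", "version": "0.1"},
            }
        elif method == "tools/list":
            result = {"tools": self.TOOLS}
        else:
            return {
                "jsonrpc": "2.0",
                "id": msg["id"],
                "error": {"code": -32601, "message": "Method not found"},
            }
        return {"jsonrpc": "2.0", "id": msg["id"], "result": result}


def handshake(server):
    """Connect, discover capabilities, and return a tool registry."""
    # 1. Initial connection: announce ourselves and agree on a version.
    init = server.handle({
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2024-11-05",  # assumed revision
            "capabilities": {},
            "clientInfo": {"name": "example-host", "version": "0.1"},
        },
    })
    server.handle({"jsonrpc": "2.0", "method": "notifications/initialized"})

    # 2. Capability discovery: only ask for what the server says it supports.
    registry = {}
    if "tools" in init["result"]["capabilities"]:
        listed = server.handle({"jsonrpc": "2.0", "id": 2, "method": "tools/list"})
        # 3. Registration: remember each tool so the model can use it later.
        for tool in listed["result"]["tools"]:
            registry[tool["name"]] = tool
    return registry


if __name__ == "__main__":
    print(sorted(handshake(ToyWeatherServer())))
```

A real host would run this once per configured server and merge the registries, which is why adding a server adds abilities without touching the AI app itself.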

From user requests to external data

Let's say you ask Claude, "What's the weather like in Delhi today?" Here's the step-by-step breakdown of what actually happens:

Need recognition: Claude looks at your question and realizes you're asking for current information that it doesn't already know from when it was trained.

Tool or resource selection: Claude figures out which external connection it needs to use to get you this fresh information.

Permission request: Your AI app shows you a quick message asking if it's alright to connect to that outside service.

Information exchange: Once you give the green light, your app sends the request to the right server using MCP's standard format.

External processing: The server takes your request and does whatever it needs to do - maybe checking a weather website, reading a file, or looking up something in a database.

Result return: The server packages up the information and sends it back in a format that your AI can easily understand.

Context integration: Claude takes this fresh information and weaves it into your ongoing conversation.

Response generation: Claude crafts a response that includes real-time information, so you get an accurate, up-to-date answer.

When everything works smoothly, this whole dance happens in just a few seconds, making it feel like Claude just magically knows current information that it obviously couldn't have learned during its original training.
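Here is a rough Python sketch of that flow, from question to answer. Everything in it is a stand-in: toy_server returns canned demo text instead of calling a weather service, the keyword check replaces the model's own judgment about whether a tool is needed, and approve is a hypothetical callback standing in for the permission prompt. Only the tools/call request and the content list in the result follow the shapes defined in the MCP specification.

```python
def toy_server(msg):
    """Hypothetical server that answers tools/call for a fake get_weather tool."""
    if msg["method"] != "tools/call":
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": msg["id"], "error": error}
    params = msg.get("params", {})
    if params.get("name") != "get_weather":
        error = {"code": -32602, "message": "Unknown tool"}
        return {"jsonrpc": "2.0", "id": msg["id"], "error": error}
    city = params["arguments"]["city"]
    # External processing would happen here; this is canned demo data.
    text = f"Weather in {city}: 31 C, clear (canned demo data)"
    result = {"content": [{"type": "text", "text": text}], "isError": False}
    return {"jsonrpc": "2.0", "id": msg["id"], "result": result}


def answer(question, city, approve, server=toy_server):
    # Need recognition and tool selection (stubbed; a real model decides this).
    if "weather" not in question.lower():
        return "No tool needed."
    tool, args = "get_weather", {"city": city}

    # Permission request: the user can veto the call.
    if not approve(tool, args):
        return "Tool call declined by the user."

    # Information exchange, external processing, result return.
    reply = server({
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": tool, "arguments": args},
    })
    if "error" in reply:
        return f"Tool call failed: {reply['error']['message']}"

    # Context integration and response generation.
    context = " ".join(
        part["text"] for part in reply["result"]["content"] if part["type"] == "text"
    )
    return f"Here is what I found. {context}"


if __name__ == "__main__":
    always_yes = lambda tool, args: True
    print(answer("What's the weather like in Delhi today?", "Delhi", always_yes))
```

Note that the permission check sits in the host, before anything is sent to the server, so a declined request never leaves your app.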

Benefits of implementing MCP

Simplified development: Create something once and use it with different AI tools without having to rebuild everything from scratch each time.

Flexibility: Want to try a different AI model or switch tools? No problem - you won't need to rebuild your whole setup.

Real-time responsiveness: Your connections stay live, so your AI can get fresh information and respond to changes as they happen.

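Security and compliance: The protocol is designed around explicit user consent for tool calls and data access, and servers can be scoped to narrow, read-only permissions. It doesn't protect your data on its own, though, so you still need to vet the servers you install and limit what they can reach.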

Scalability: Need to add new features? Just plug in another server and you're good to go - no major overhauls needed.

Examples of MCP servers

The MCP ecosystem comprises a diverse range of servers including reference servers (created by the protocol maintainers as implementation examples), official integrations (maintained by companies for their platforms), and community servers (developed by independent contributors).

Reference servers

These servers show off what MCP can do and give other developers a blueprint for building their own. The MCP team maintains these basic connections that include must-have integrations like:

PostgreSQL: Lets your AI talk to PostgreSQL databases and run read-only queries (it can't change anything). This is a great example of how you can give AI access to information while keeping it safe - it can look but can't touch.

Slack: Your AI can read and send messages in Slack, add emoji reactions, look through old conversations, and do other Slack stuff. Pretty straightforward, but it shows how MCP can pull information from all sorts of places, including the chat apps your team already uses.

GitHub: Does a bunch of different things like automating code tasks, analyzing project data, and even helping build AI-powered apps. The first version was pretty basic, but the current GitHub connection is a solid example of how AI can work with external websites and services.
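The "look but can't touch" idea from the PostgreSQL server is easy to try out yourself. The sketch below is not how the reference server is implemented, and it uses SQLite from Python's standard library instead of PostgreSQL so it runs without any setup. The customers table and the function names are made up for the demo. The point is only the pattern: the connection handed to the AI-facing code is opened read-only, so a write fails no matter what SQL comes in.

```python
import os
import pathlib
import sqlite3
import tempfile


def make_demo_db(path):
    """Create a small throwaway database with a normal read-write connection."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO customers (name) VALUES ('Asha'), ('Ben')")
    conn.commit()
    conn.close()


def open_read_only(path):
    """Open the same file again, but with mode=ro so SQLite rejects writes."""
    uri = pathlib.Path(path).as_uri() + "?mode=ro"
    return sqlite3.connect(uri, uri=True)


def run_query(conn, sql):
    """What a query tool would expose: run SQL and return the rows."""
    return conn.execute(sql).fetchall()


if __name__ == "__main__":
    db_path = os.path.join(tempfile.mkdtemp(), "demo.db")
    make_demo_db(db_path)
    conn = open_read_only(db_path)
    print(run_query(conn, "SELECT name FROM customers ORDER BY id"))
    try:
        run_query(conn, "DELETE FROM customers")
    except sqlite3.OperationalError as exc:
        print("write blocked:", exc)
    conn.close()
```

Enforcing the restriction at the connection level is safer than trying to filter SQL strings, because there is no query text that can get around it.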

These examples show MCP's potential, but building your own MCP-powered AI agents requires understanding both the protocol and agent architecture. That's exactly what we cover in our Advanced AI Course - from MCP fundamentals to creating sophisticated agents that can interact with any system.

Official MCP integrations

These servers are officially supported by the companies who own the tools. Integrations like these are production-ready connectors available for immediate use.

Stripe: Handles payment stuff through plain English - you can ask it to create invoices, add new customers, or process refunds just by talking to it normally. This is perfect for businesses that want their customer service chatbots to handle billing questions without human help.

JetBrains: Brings AI help right into coding environments that developers already use. You can ask it to look through your code files, set debugging points, or run terminal commands just by describing what you want in regular language.

Apify: Gives you access to over 4,000 different web tools that can do things like browse websites intelligently, scrape data from various platforms, and crawl through content. It's like having a Swiss Army knife for gathering information from the internet.

Conclusion:

What it is: MCP is like a universal connector that lets AI chat with any app or database - no more building custom bridges every time.

Why it matters:

For users: Your AI can now access real-time info and actually do things (send emails, update databases, check the weather)

For developers: Build once, use everywhere - no more starting from scratch for each AI integration

For businesses: AI that actually works with your existing tools instead of living in isolation

The bottom line: MCP transforms AI from a smart chatbot into a capable assistant that can connect to your real-world data and get actual work done. It's open-source, backed by major companies, and becoming the standard way AI talks to other software.

Think of it this way: Before MCP, AI was like having a brilliant consultant who could only work with whatever papers you handed them. Now it's like giving that consultant access to your entire office, your databases, your tools, and the internet - and they can actually take action on what they learn.

Resources to Dive Deeper:

Introducing the Model Context Protocol by Anthropic.

Model Context Protocol on GitHub.

Collection of Servers for MCP on GitHub.

Building Agents with Model Context Protocol (and especially the part: What’s next for MCP) by Mahesh Murag from Anthropic, Workshop @AI Engineering Summit.

MCP Guardian on GitHub.

Advanced AI Agents Course - Complete hands-on training covering both AI agent development and MCP integration from basics to production deployment.