What Is a Neural Network and How Does It Work?

Discover how neural networks power Netflix, Spotify & your phone. Complete guide to AI types, applications & real-world examples. Learn how AI shapes your daily life.

What if I told you that right now, as you read this, thousands of artificial brains are making decisions about your life and you have no idea they exist?

You interact with AI more than your best friend, yet you couldn't explain how it works if your life depended on it. Your phone recognizes your face, Netflix knows your taste, and your car avoids traffic you haven't seen.

It's not magic. It's Neural Networks, and they're the invisible puppeteers behind your digital life.

Introduction to Neural Networks

A Neural Network is a type of Machine Learning model that draws inspiration from the information processing mechanisms of the human brain. It is made up of layers of interconnected units called neurons that collaborate to identify patterns and connections in data.

These networks are perfect for tasks like image recognition, speech processing, and natural language understanding because they can spot intricate patterns that conventional algorithms might overlook.

The biological neural networks found in the brain, where neurons interact to process information, served as an inspiration for the design of neural networks. In a similar vein, artificial neural networks learn from input data and modify themselves to increase accuracy.

Evolution of Neural Network Machine Learning

Let us mark some of the important years in the evolution of neural network machine learning.

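1795 – Method of least squares, the basis of linear regression, in use by Gauss (by his own later account); it went on to be applied to predicting planetary movements.

1805 – First formal publication of the least squares method by Legendre; Gauss published his own treatment in 1809.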

1943 – McCulloch & Pitts propose the first computational model of neural networks (non-learning).

1949 – Hebbian learning rule introduced: “neurons that fire together, wire together.”

1954 – Machines simulate Hebbian networks.

1956 – More neural network computational models created during the Dartmouth conference.

1958 – Perceptron model by Frank Rosenblatt: first learning algorithm for binary classification.

1960 – Introduction of multilayer perceptrons (MLPs) with adaptive hidden layers.

1965 – First deep learning algorithm: Group Method of Data Handling (GMDH) by Ivakhnenko.

1967 – MLPs trained using stochastic gradient descent for the first time.

1969 – ReLU (Rectified Linear Unit) activation function proposed.

1969 – Minsky and Papert demonstrate that single-layer perceptrons can't solve the XOR problem, halting progress for over a decade.

1971 – Description of an 8-layer deep network trained using regression techniques.

1979 – Introduction of the Neocognitron, a precursor to convolutional neural networks (CNNs), but without backpropagation.

How Does a Neural Network Work?

Arthur Samuel, one of the early American pioneers of computer gaming and artificial intelligence, described the core idea of machine learning roughly as follows:

Suppose we arrange for an automatic way to test the effectiveness of any current weight assignment in terms of actual performance, and provide a mechanism for altering the weight assignment so as to maximize that performance. We don’t need to go into the details of such a procedure to see that it could be made entirely automatic, and that a machine programmed in this way would “learn” from its experience.

Working Explained

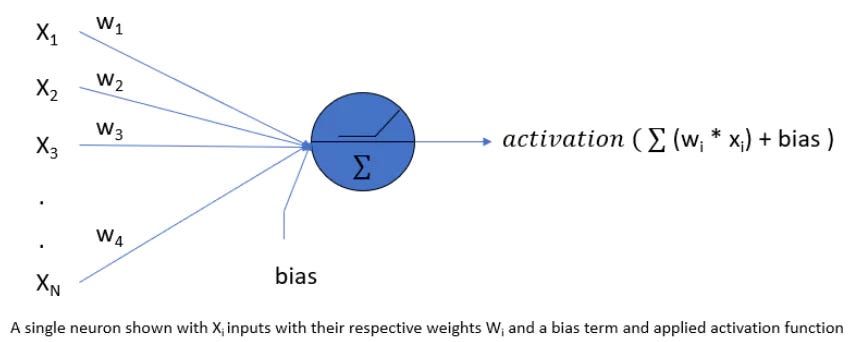

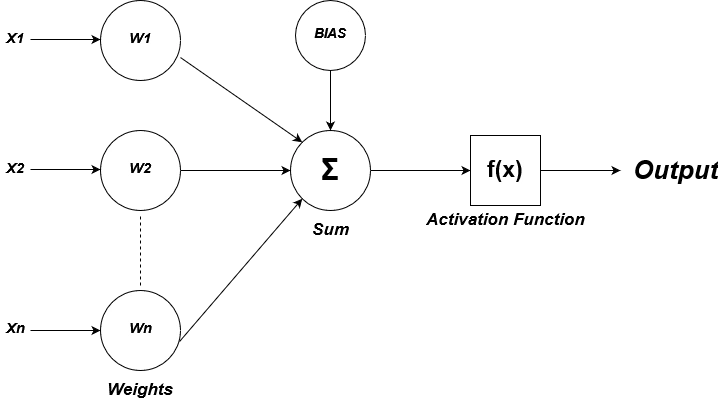

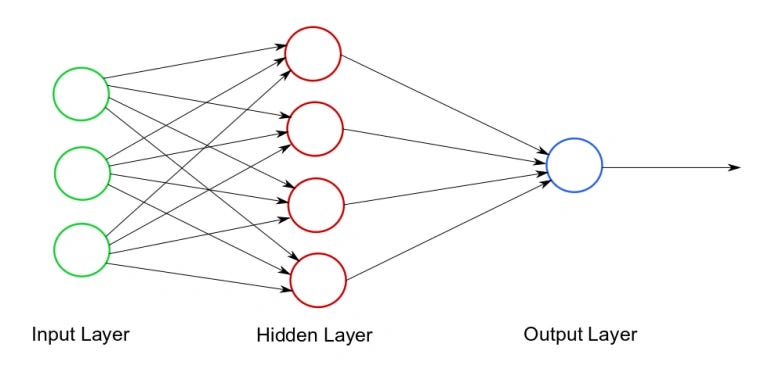

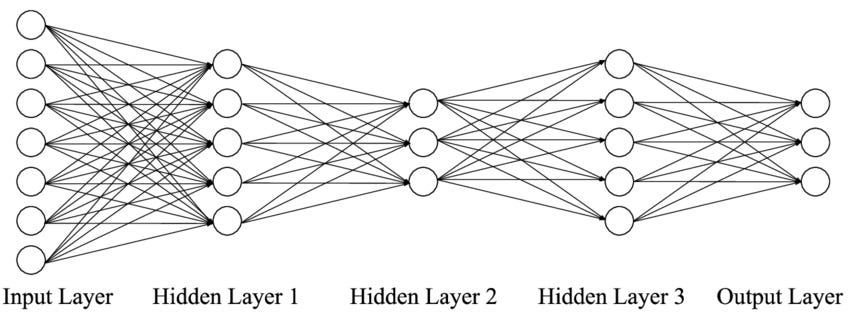

We can regard an artificial neuron as a linear regression model (simple or multiple) with an activation function at the end. A neuron in layer i receives the outputs of all neurons in layer i-1 as inputs, computes their weighted sum, and adds a bias. The result is then passed through an activation function, as shown in the figures above.
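To make the weighted-sum idea concrete, here is a minimal sketch of a single neuron in plain Python. The sigmoid activation and the input, weight, and bias values are assumptions chosen only for illustration; the article does not prescribe a particular activation function.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values, chosen so the weighted sum is exactly 0.
output = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(output)  # 0.5, because sigmoid(0) is 0.5
```

Changing a weight or the bias shifts the weighted sum, which is how learning later changes the neuron's behavior.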

The first neuron in the first hidden layer takes inputs from the whole previous layer, and so does the second. The same applies to every neuron in the first hidden layer.

Each layer feeds its results into the next layer, creating a chain of connected neurons. This flow of information from input to output is called forward propagation.
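Forward propagation can be sketched as a loop over layers, where each layer's output becomes the next layer's input. This is a rough illustration under stated assumptions: the layer sizes, weights, and the choice of ReLU for the hidden layer are hypothetical, not taken from the figures.

```python
def relu(z):
    """Pass positive values through, clamp negatives to zero."""
    return max(0.0, z)

def identity(z):
    return z

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: every neuron sees every input."""
    return [
        activation(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Feed the inputs through each layer in turn."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations

# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0], relu),
    ([[2.0, 1.0]], [0.5], identity),
]
print(forward([3.0, 1.0], layers))  # [5.5]
```

The hidden layer produces 2.0 and 1.0, and the output neuron combines them as 2.0 * 2.0 + 1.0 * 1.0 + 0.5.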

How Do Neural Networks Learn?

Forward Propagation: During this stage, the network passes an input data item through its layers one by one:

Input Layer: Data comes into the network, in the form of an image or text, for example.

Hidden Layers: Each neuron in the hidden layers takes the outputs of the previous layer, performs a calculation using weights, a bias, and an activation function, then passes the result to the next layer.

Output Layer: The last layer produces the final output, for example a prediction or classification (e.g. the image is a dog or a cat).

Example: Suppose you feed in an image of a cat. The network processes the features of this image, like edges, shapes, and colors, in the hidden layers, and eventually decides that the image is a "cat".

Backpropagation: To assess prediction accuracy after forward propagation, the network checks how close its guess was to the right answer.

The backpropagation process can be broken down into the following steps:

Error Calculation: The neural network uses a loss function to measure the actual prediction error relative to the target output, or expected output.

Weight Adjustment: The neural network backpropagates the error, calculating how much each connection contributed to it and therefore which weights need adjusting. The weights in each layer of the network are then updated accordingly.

Iteration: The process loops through the above steps for many rounds of practice. With each update, the model improves as the weights are adjusted until the error between the actual output and the target output is minimized.

Backpropagation lets the network learn from its mistakes and steadily improve its predictions.
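The three steps above can be sketched on a deliberately tiny network with one input, one hidden neuron, and one output neuron. Everything specific here is an assumption for illustration: the sigmoid activations, the squared-error loss, the starting weights, the learning rate, and the two-example dataset.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(params, x):
    w1, b1, w2, b2 = params
    return sigmoid(w2 * sigmoid(w1 * x + b1) + b2)

def train(data, epochs=2000, lr=0.5):
    w1, b1, w2, b2 = 0.5, 0.0, 0.5, 0.0  # hypothetical starting weights
    losses = []
    for _ in range(epochs):
        g_w1 = g_b1 = g_w2 = g_b2 = 0.0
        loss = 0.0
        for x, target in data:
            # Forward propagation
            h = sigmoid(w1 * x + b1)
            y = sigmoid(w2 * h + b2)
            # Error calculation (squared-error loss)
            loss += 0.5 * (y - target) ** 2
            # Backpropagation: chain rule from the output back to the input
            d_out = (y - target) * y * (1.0 - y)
            d_hid = d_out * w2 * h * (1.0 - h)
            g_w2 += d_out * h
            g_b2 += d_out
            g_w1 += d_hid * x
            g_b1 += d_hid
        # Weight adjustment: step against the gradient
        w1 -= lr * g_w1
        b1 -= lr * g_b1
        w2 -= lr * g_w2
        b2 -= lr * g_b2
        losses.append(loss)
    return (w1, b1, w2, b2), losses

# Toy task: output should be low for input 0 and high for input 1.
params, losses = train([(0.0, 0.0), (1.0, 1.0)])
print(losses[0], losses[-1])  # the loss at the end is lower than at the start
```

Real frameworks compute these gradients automatically; writing them by hand here is only meant to show where each step of the loop fits.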

Types of Neural Networks

Think of neural networks as different types of brains, each one specially built to solve different kinds of problems. Just like how your brain has different areas that handle vision, memory, and speech, these artificial brains are designed with specific strengths.

1. Feedforward Neural Network (FNN)

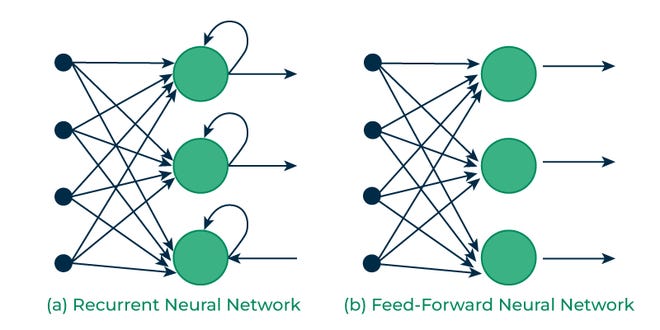

Overview: This is the simplest type of artificial brain where information flows in just one direction - like water flowing down a hill. There's no going back or circling around, just straight from start to finish.

How It Works: Picture information entering at one end, getting processed by each layer one after another, and coming out transformed at the other end. Each layer takes what the previous layer learned and adds its own understanding before passing it along.

Applications: This straightforward approach works great for basic decision-making tasks. You might use it to teach a computer whether a handwritten number is a 3 or an 8, or to help a system decide between simple yes-or-no answers.

2. Multilayer Perceptron (MLP)

Structure: Think of this as having multiple teams of experts, each team specializing in spotting different patterns. It's a feedforward network with one or more hidden layers between the input and the output, which lets it dig deeper into complex problems.

How It Works: Each hidden layer acts like a detective, uncovering clues and patterns that weren't obvious at first glance. The more layers you have, the more sophisticated patterns the network can recognize and understand.

Applications: This deeper thinking power makes it perfect for trickier jobs like figuring out if a customer review is positive or negative, detecting credit card fraud, or predicting whether a company's stock will perform well.

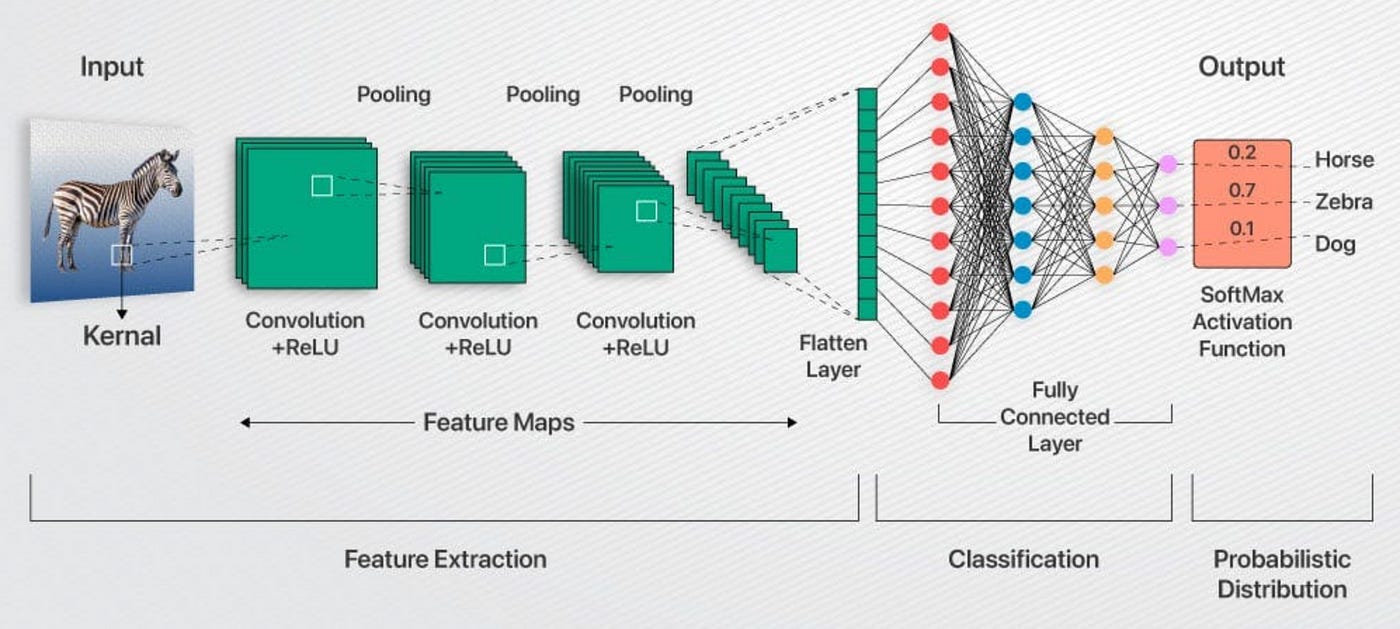

3. Convolutional Neural Network (CNN)

Overview: It's specifically designed to understand images and videos by looking for patterns like edges, shapes, and textures - much like how your eyes naturally scan a photo.

Key Feature: It includes special layers, called pooling layers, that shrink down complex images while keeping the important details intact. Think of it like creating a really good summary of a long book - shorter, but with all the key points preserved.

Applications: This visual specialist shines in tasks like recognizing faces in your photo gallery, helping doctors spot diseases in medical scans, enabling self-driving cars to identify pedestrians and traffic signs, and even helping apps tell the difference between a cat and a dog in pictures.
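As a rough sketch of the two ideas in this section, the snippet below slides a small filter over an image to find an edge, then shrinks the result with 2x2 max pooling. The tiny image and the hand-picked filter are hypothetical; in a real CNN the filter values are learned during training.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image with no padding (as CNN layers do)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def max_pool2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 block."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]

# Hypothetical 4x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(4)]
edge_filter = [[-1, 1]]  # responds where brightness rises from left to right

edges = convolve2d(image, edge_filter)
pooled = max_pool2x2(edges)
print(edges[0])  # [0, 1, 0, 0]
print(pooled)    # [[1, 0], [1, 0]]
```

The pooled map is a quarter of the size, yet it still records that a vertical edge sits in the left half of the image.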

4. Recurrent Neural Network (RNN)

Overview: It remembers what it just processed and uses that information to better understand what comes next. It's like having a conversation where you need to remember what was said earlier to make sense of what's being said now.

Key Feature: The magic happens through feedback loops - the network can look back at its previous thoughts and use them to inform its current decisions.

Applications: This memory feature makes it invaluable for anything involving sequences or time-based patterns. You'll find it powering voice-to-text systems, helping generate human-like text, and analyzing trends in financial markets over time.

Limitation: Just like how you might forget the beginning of a really long story, these networks sometimes struggle to remember important details from way back in long sequences.
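The feedback loop, and the fading memory behind this limitation, can be seen in a one-neuron sketch. The tanh activation and the weight values are assumptions for illustration only.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """Mix the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    h = 0.0  # the network starts with an empty memory
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

with_signal = run_rnn([1.0, 0.0, 0.0])     # a signal at the first step only
without_signal = run_rnn([0.0, 0.0, 0.0])  # no signal at all

print(with_signal)     # still non-zero at the end, but shrinking each step
print(without_signal)  # [0.0, 0.0, 0.0]
```

The first input still influences the last state, which is the memory at work, but its trace gets weaker with every step. Over a long sequence it can fade away almost entirely.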

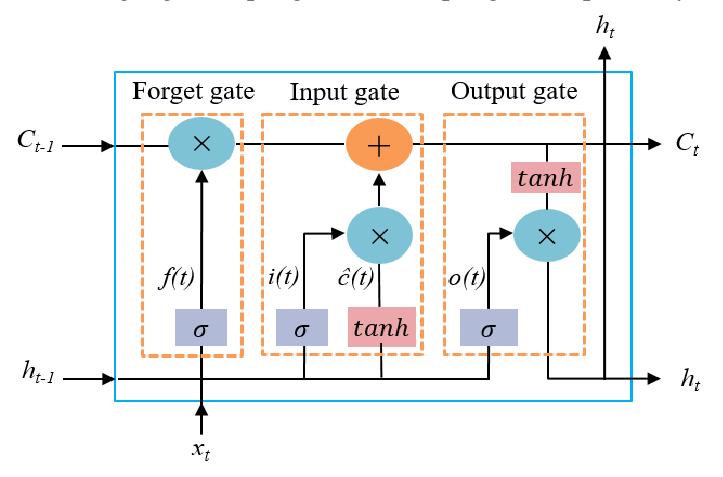

5. Long Short-Term Memory (LSTM)

Overview: This is the RNN's evolved form, designed to tackle the forgetfulness problem. It's like having a really smart assistant who knows exactly what to remember and what to forget, keeping important information available for as long as needed.

Key Feature: It has special memory cells that act like smart storage units, deciding what information is worth keeping around and what can be safely discarded.

Applications: This selective memory makes it perfect for complex tasks like translating entire sentences from one language to another, building chatbots that can maintain meaningful conversations, and analyzing long-term patterns in stock market data.
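Here is a minimal sketch of a single LSTM memory cell using the standard gate equations, reduced to scalars. The parameter names and values are hypothetical: the gate weights are set to zero so that the biases alone pin the gates open or shut, whereas a trained network would learn all of these values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate memory
    c = f * c_prev + i * g  # keep some old memory, add some new
    h = o * math.tanh(c)    # expose part of the memory as output
    return h, c

def make_params(forget_bias, input_bias):
    """Hypothetical parameters: gates controlled by their biases only."""
    p = {name: 0.0 for name in
         ("wf", "uf", "wi", "ui", "wo", "uo", "bo", "ug", "bg")}
    p.update(wg=1.0, bf=forget_bias, bi=input_bias)
    return p

def cell_after(steps, params, c_start=1.0):
    """Run the cell on all-zero inputs and return the final memory value."""
    h, c = 0.0, c_start
    for _ in range(steps):
        h, c = lstm_step(0.0, h, c, params)
    return c

kept = cell_after(50, make_params(forget_bias=10.0, input_bias=-10.0))
dropped = cell_after(50, make_params(forget_bias=-10.0, input_bias=-10.0))
print(kept)     # close to the starting value of 1.0
print(dropped)  # close to 0.0
```

With the forget gate held open, the stored value survives 50 steps almost untouched. With it held shut, the value is wiped almost immediately. Learning when to do which is what gives the LSTM its selective memory.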

Each of these neural network types has found its perfect role in making our daily lives easier and more connected. Whether it's the visual expert helping organize your photos or the memory keeper powering your favorite translation app, these different "brains" work behind the scenes to make technology more intuitive and helpful.

Types of Learning Methods Used in Neural Networks

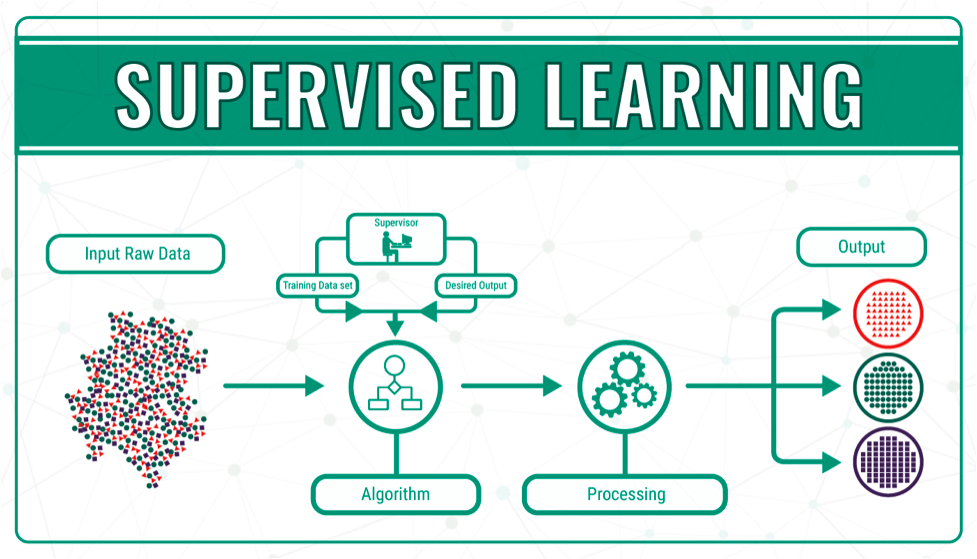

Supervised Learning

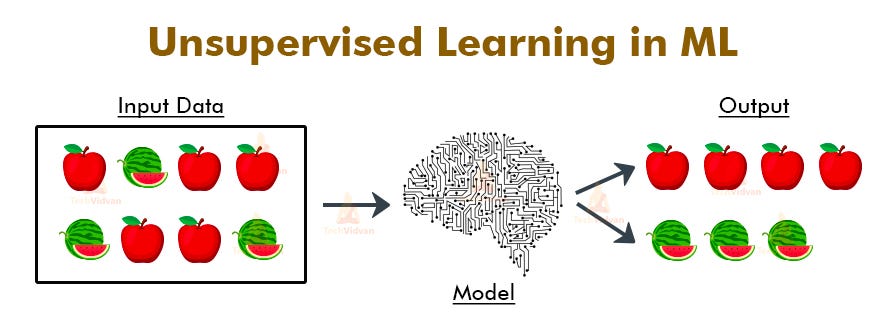

Unsupervised Learning

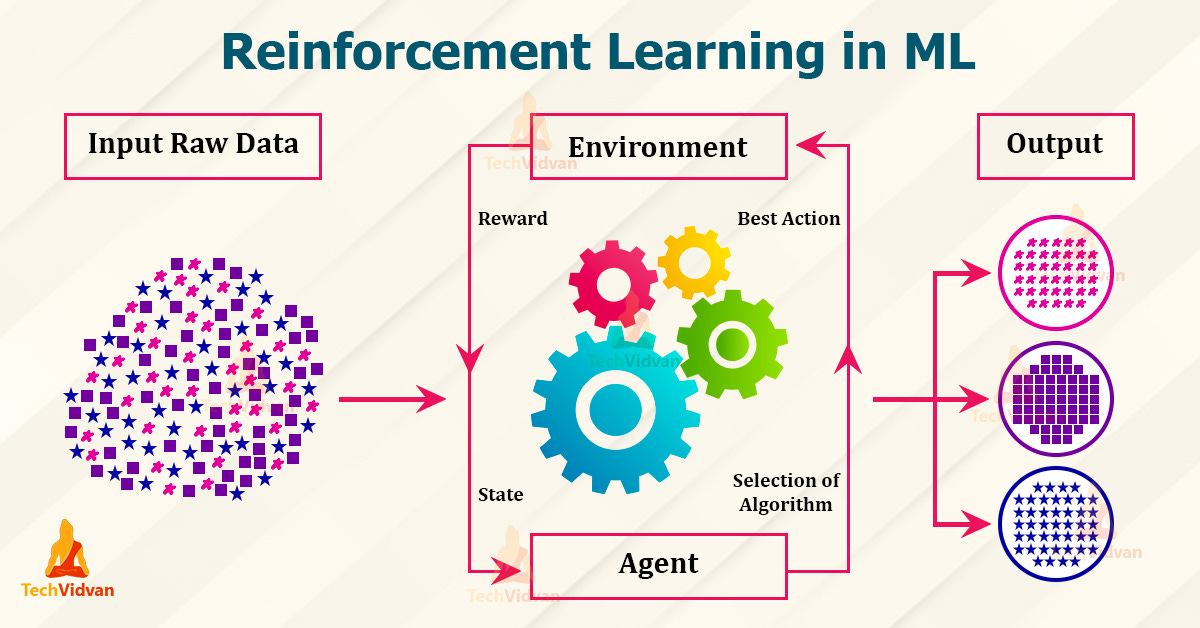

Reinforcement Learning

Supervised Learning: Just like the name says, this is learning with someone guiding you every step of the way. Think of it like learning to drive with an instructor sitting next to you, telling you exactly what to do and correcting your mistakes.

You show the neural network tons of examples with the right answers already provided. For instance, you might show it thousands of photos labeled "cat" or "dog." The network learns by comparing its guesses to the correct answers, adjusting itself each time it gets something wrong. Eventually, it becomes good enough to make accurate predictions on new, unseen data.
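The compare-and-adjust loop can be sketched with the classic perceptron learning rule on a handful of labeled examples. The dataset here (the logical AND of two inputs) and the settings are assumptions chosen to keep the example small; a photo classifier would use far more data and a much larger network.

```python
def predict(weights, bias, inputs):
    """Guess 1 if the weighted sum is positive, otherwise 0."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0

def train_perceptron(examples, epochs=10, lr=1):
    weights = [0] * len(examples[0][0])
    bias = 0
    for _ in range(epochs):
        for inputs, label in examples:
            # Compare the guess with the correct answer
            error = label - predict(weights, bias, inputs)
            if error != 0:
                # Adjust only when the guess was wrong
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
    return weights, bias

# Labeled examples: the answer is 1 only when both inputs are 1.
examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(examples)

for inputs, label in examples:
    print(inputs, "->", predict(weights, bias, inputs), "(label:", label, ")")
```

After a few passes over the data the guesses match every label, and the weights stop changing because there are no more mistakes to correct.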

Unsupervised Learning: This is the opposite - no teacher, no right answers provided. It's like being dropped in a foreign country and having to figure out the culture and language patterns by yourself.

The network looks at data and tries to find hidden patterns or group similar things together. For example, if you give it customer data, it might discover that certain customers tend to buy similar products, even though you never told it to look for that pattern. It creates its own categories and rules based on what it observes.
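To show grouping without labels, here is a sketch using k-means clustering on made-up monthly spending figures. Note that k-means is a classic clustering algorithm, not a neural network; it is swapped in here only because it shows the idea in a few lines. The data and the choice of two groups are assumptions.

```python
def kmeans_1d(values, k=2, iterations=10):
    """Group numbers into k clusters (assumes k >= 2)."""
    # Deterministic start: spread the initial centres between min and max.
    lo, hi = min(values), max(values)
    centres = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assign every value to its nearest centre
        labels = [min(range(k), key=lambda j: abs(v - centres[j]))
                  for v in values]
        # Move each centre to the average of its members
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            if members:
                centres[j] = sum(members) / len(members)
    return labels, centres

# Hypothetical monthly spend per customer; no labels are provided.
spend = [120, 150, 100, 900, 950, 870]
labels, centres = kmeans_1d(spend)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

Nobody told the algorithm that there are "low spenders" and "high spenders"; the two groups emerge from the data itself.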

Reinforcement Learning: This sits somewhere between the two approaches. Instead of getting exact answers as in supervised learning or no guidance as in unsupervised learning, you get feedback that tells you how well you're doing.

Think of it like learning to play a video game. You don't get told exactly which button to press, but you do get a score that goes up or down based on your actions. The network learns by trying different approaches and paying attention to what works and what doesn't.
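The trial-and-feedback loop can be sketched with a simple three-button game, often called a multi-armed bandit. This is an illustration under stated assumptions: a small table of score estimates stands in for a neural network, and the win probabilities, exploration rate, and random seed are all hypothetical.

```python
import random

def run_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays best using only reward feedback."""
    rng = random.Random(seed)
    n = len(reward_probs)
    estimates = [0.0] * n  # current belief about each action's value
    counts = [0] * n
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try something at random
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit
        # The environment gives a score, never the "right answer"
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Nudge the estimate toward the observed reward (running average)
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

# Hypothetical game: button 2 pays out most often, but the learner isn't told.
estimates, counts = run_bandit([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print("best action found:", best)
```

The learner is never told which button is best. It works that out from the scores it receives, and it keeps trying the other buttons occasionally in case its beliefs are wrong.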

What are Neural Networks Used For?

Neural networks are used across many domains:

Recognizing things around us: They help your phone recognize your face, let cars see pedestrians, and enable voice assistants to understand what you're saying.

Understanding human language: They power chatbots, translate languages, analyze whether a review is positive or negative, and even generate human-like text.

Healthcare: They help doctors spot diseases in X-rays and scans, predict how patients might respond to treatments, and discover new medicines.

Money and business: They predict stock market trends, catch credit card fraud, assess loan risks, and make split-second trading decisions.

Personal recommendations: They figure out what movies you might like on streaming services, suggest products you might want to buy, and curate your social media feed.

Robotics and self-driving cars: They process information from cameras and sensors to help machines navigate and make decisions in real-time.

Entertainment: They create smarter video game characters, generate realistic graphics, and build immersive virtual worlds.

Manufacturing: They monitor production lines, predict when machines need maintenance, and ensure quality control.

Scientific research: They help scientists analyze complex data, simulate natural phenomena, and make new discoveries across different fields.

Creative work: They compose music, create artwork, and generate other forms of creative content.

Real-World Examples of Neural Networks:

Netflix - Movie and show recommendations based on your viewing history.

Spotify - Discovers new music you'll love through Daily Mix and Discover Weekly.

YouTube - Suggests videos and creates your personalized homepage feed.

Amazon - Product recommendations and "People who bought this also bought".

Flipkart - Personalized product suggestions and price predictions.

Zomato - Restaurant recommendations and food delivery time estimates.

Uber - Predicts ride demand and calculates optimal pricing.

Ola - Route optimization and driver-rider matching.

Google Maps - Traffic prediction and fastest route suggestions.

Conclusion

Neural networks have come a long way from their humble beginnings in the 1940s to becoming the invisible force behind many of today's most impressive technologies. What started as a simple attempt to mimic how our brains work has evolved into sophisticated systems that can see, understand language, and even make creative decisions.

The beauty of neural networks lies in their versatility. Whether it's helping doctors diagnose diseases faster, making our daily commutes safer through autonomous vehicles, or simply making our phones understand what we're saying, these systems have quietly woven themselves into the fabric of modern life.

As we've seen, different types of neural networks excel at different tasks - CNNs for visual recognition, RNNs for understanding sequences, and LSTMs for remembering important information over time. Each has found its perfect niche in solving real-world problems.

The journey from basic perceptrons to today's deep learning networks shows us how far we've come, but it also hints at the exciting possibilities ahead. As these systems continue to evolve, they'll likely become even more integrated into our daily experiences, making technology more intuitive and helpful.

Understanding neural networks isn't just about grasping a technical concept - it's about appreciating the remarkable ways we're teaching machines to think, learn, and adapt. And in many ways, this is just the beginning of what's possible when we combine human creativity with artificial intelligence.

The future of neural networks is bright, and we're all part of this incredible journey of discovery and innovation.
