What is RAG (Retrieval Augmented Generation)?

Why is Retrieval-Augmented Generation AI's biggest breakthrough?

What if I told you there’s an AI technique that can think and remember at the same time, like a Google search that writes well-crafted answers for you on the spot?

Meet Retrieval-Augmented Generation (RAG): the secret behind smarter chatbots, sharper virtual assistants, and next-gen AI tools you’re probably already using without even knowing it. In this blog, we’ll break down what RAG really is, how it works, and why it might just change the way we interact with AI forever.

What Is RAG?

Retrieval-Augmented Generation (RAG) is like giving AI a cheat sheet. Instead of relying only on what it already knows, it pulls fresh, relevant information from external sources and then creates a clear, context-rich response. Think of it as combining GPT-4’s creativity with a powerful search engine, resulting in smarter, more accurate answers.

While LLMs are incredibly smart, they aren’t perfect:

Limited knowledge: They only know what they were trained on, which means some answers can be outdated or missing domain-specific details.

Hallucinations: Sometimes, they sound confident but end up being completely wrong.

Generic responses: Without external data, answers can feel vague or lack depth.

That’s where RAG comes in. It connects these models to live, domain-specific data, whether from databases, documentation, or APIs, so responses are more accurate, detailed, and up-to-date.

What is Retrieval?

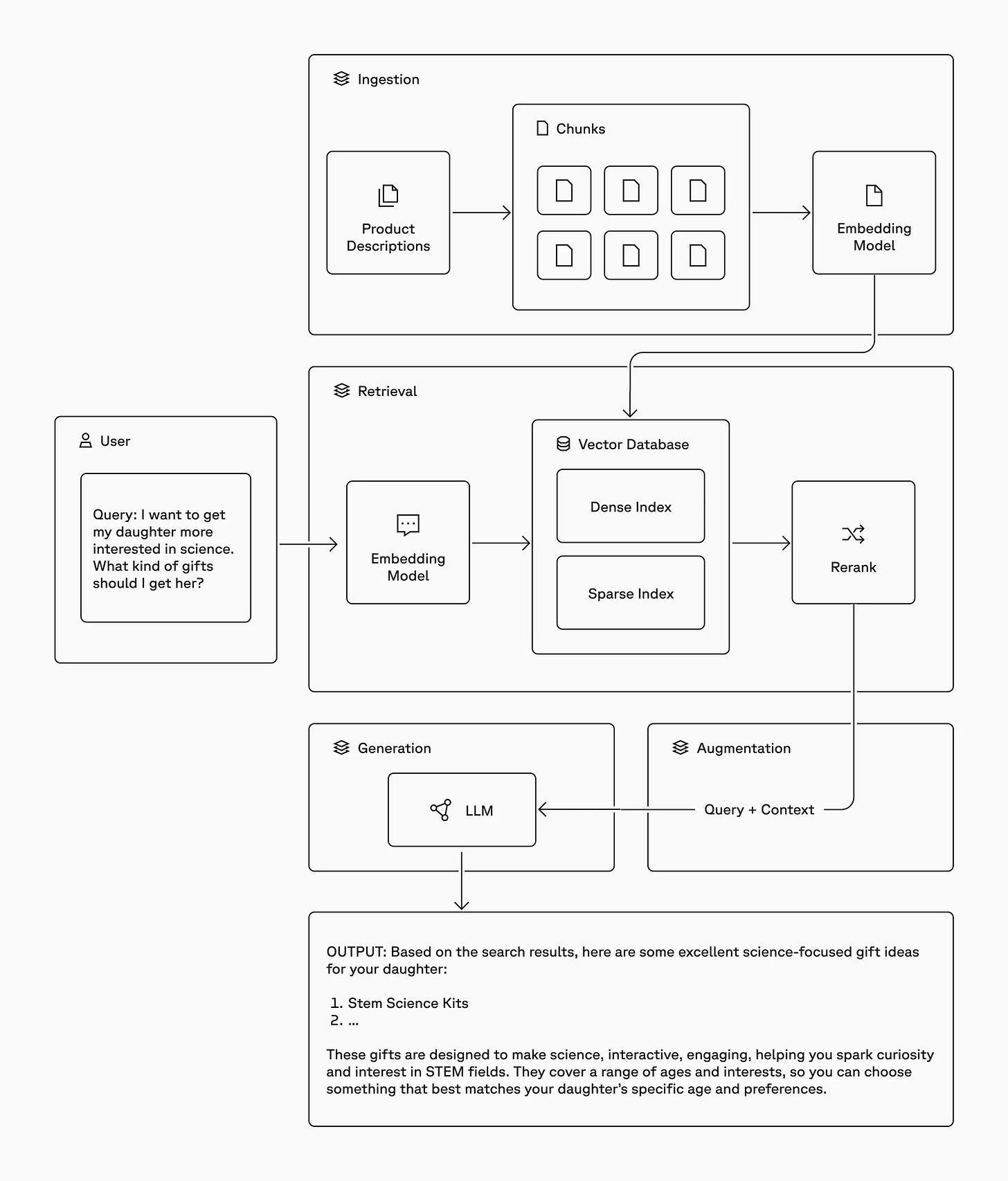

A basic way to fetch information is through semantic search, which finds results based on meaning rather than exact words. But there’s an even smarter way: hybrid search.

Hybrid search blends semantic search (using dense vectors for meaning) with lexical search (using sparse vectors for exact keywords). This is super handy when users describe the same thing in different words or rely on unique internal jargon like acronyms, product names, or team names.

Here’s how it works: we turn the user’s query into a vector embedding (for hybrid search, both a dense and a sparse vector) and search the database. With hybrid search, we check both dense and sparse indexes (or a single hybrid index), combine and de-duplicate the results, then use a reranking model to score them and return the most relevant matches.
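The hybrid flow described above can be sketched in plain Python. This is a toy, dependency-free illustration built on several assumptions. The dense vectors are hand-written placeholders for real embeddings. The sparse side is a simple exact-term count rather than a real sparse index such as BM25. `toy_rerank` is a hypothetical stand-in for an actual reranking model. Names like `hybrid_search` are illustrative, not a library API.

```python
import math
from typing import Callable

def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity between two dense vectors (0.0 if either is all zeros).
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def dense_search(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int) -> list[str]:
    # Semantic side: rank chunk IDs by embedding similarity to the query.
    return sorted(doc_vecs, key=lambda d: cosine(query_vec, doc_vecs[d]), reverse=True)[:k]

def sparse_search(query: str, docs: dict[str, str], k: int) -> list[str]:
    # Lexical side: rank chunk IDs by how many exact query terms they contain.
    terms = set(query.lower().split())

    def hits(d: str) -> int:
        return sum(t in terms for t in docs[d].lower().split())

    return [d for d in sorted(docs, key=hits, reverse=True)[:k] if hits(d) > 0]

def hybrid_search(
    query: str,
    query_vec: list[float],
    docs: dict[str, str],
    doc_vecs: dict[str, list[float]],
    rerank: Callable[[str, str], float],
    k: int = 2,
) -> list[str]:
    candidates = dense_search(query_vec, doc_vecs, k) + sparse_search(query, docs, k)
    # Combine both result lists and de-duplicate, keeping first-seen order.
    unique = list(dict.fromkeys(candidates))
    # Let the reranker score each (query, chunk) pair and keep the best k.
    return sorted(unique, key=lambda d: rerank(query, docs[d]), reverse=True)[:k]

def toy_rerank(query: str, text: str) -> float:
    # Hypothetical placeholder: a real system would call a reranking model here.
    return float(len(set(query.lower().split()) & set(text.lower().split())))

docs = {
    "a": "Reset the X200 router by holding the power button",
    "b": "Our laptops ship with a one year warranty",
    "c": "Battery life tips for smartphones",
}
doc_vecs = {"a": [0.9, 0.1], "b": [0.1, 0.9], "c": [0.5, 0.5]}  # made-up embeddings
result = hybrid_search("how do I reset my x200", [0.8, 0.2], docs, doc_vecs, toy_rerank)
```

In this example, chunk "a" is found by both searches but kept only once. Chunk "c" survives only because the dense side ranked it. In a real application, the sparse search and the reranker would be backed by a proper sparse index and a reranking model.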

Augmentation

Once you’ve got the most relevant matches from the retrieval step, it’s time to work some magic by creating an augmented prompt.

This prompt blends the user’s question with the search results you just fetched, giving the LLM both context and facts to work with. The result? Responses that are sharper, richer, and way more accurate.

Here’s what an augmented prompt could look like:

QUESTION:

<the user's question>

CONTEXT:

<the search results to use as context>

Using the CONTEXT provided, answer the QUESTION. Keep your answer grounded in the facts of the CONTEXT. If the CONTEXT doesn't contain the answer to the QUESTION, say you don't know.

When you give the LLM both the search results and the user’s question as context, it’s like handing it a cheat sheet before an exam. The model now has the most accurate and relevant information at its fingertips, helping it craft responses that are precise, reliable, and far less likely to go off track.
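The QUESTION/CONTEXT template is easy to fill in programmatically. Here is a minimal Python sketch. `build_augmented_prompt` is a hypothetical helper name. Numbering each retrieved chunk is an assumed convenience that is not part of the original template; it makes it easier to ask the model which chunk it relied on.

```python
def build_augmented_prompt(question: str, results: list[str]) -> str:
    # Number each retrieved chunk so the CONTEXT block stays readable.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(results, start=1))
    # Fill the QUESTION / CONTEXT template and append the grounding instructions.
    return (
        "QUESTION:\n\n"
        f"{question}\n\n"
        "CONTEXT:\n\n"
        f"{context}\n\n"
        "Using the CONTEXT provided, answer the QUESTION. Keep your answer grounded "
        "in the facts of the CONTEXT. If the CONTEXT doesn't contain the answer to "
        "the QUESTION, say you don't know."
    )

prompt = build_augmented_prompt(
    "How long is the laptop warranty?",
    ["Laptop warranty section from the manual.", "Warranty FAQ entry."],
)
```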

Generation

With the augmented prompt in place, the LLM now has access to the most relevant and reliable facts from your vector database. This means your application can deliver accurate, grounded answers while greatly reducing the chances of hallucination.

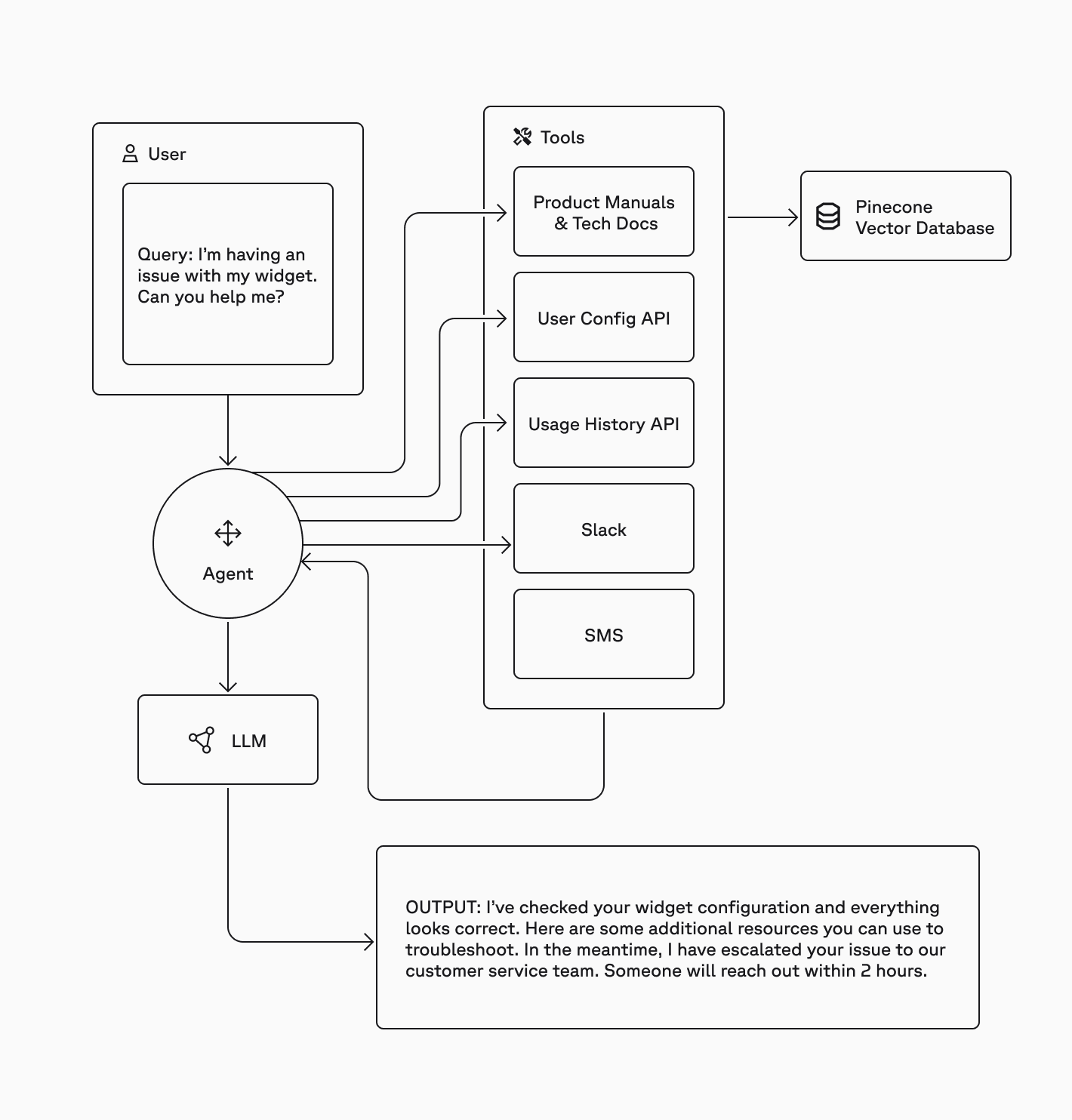

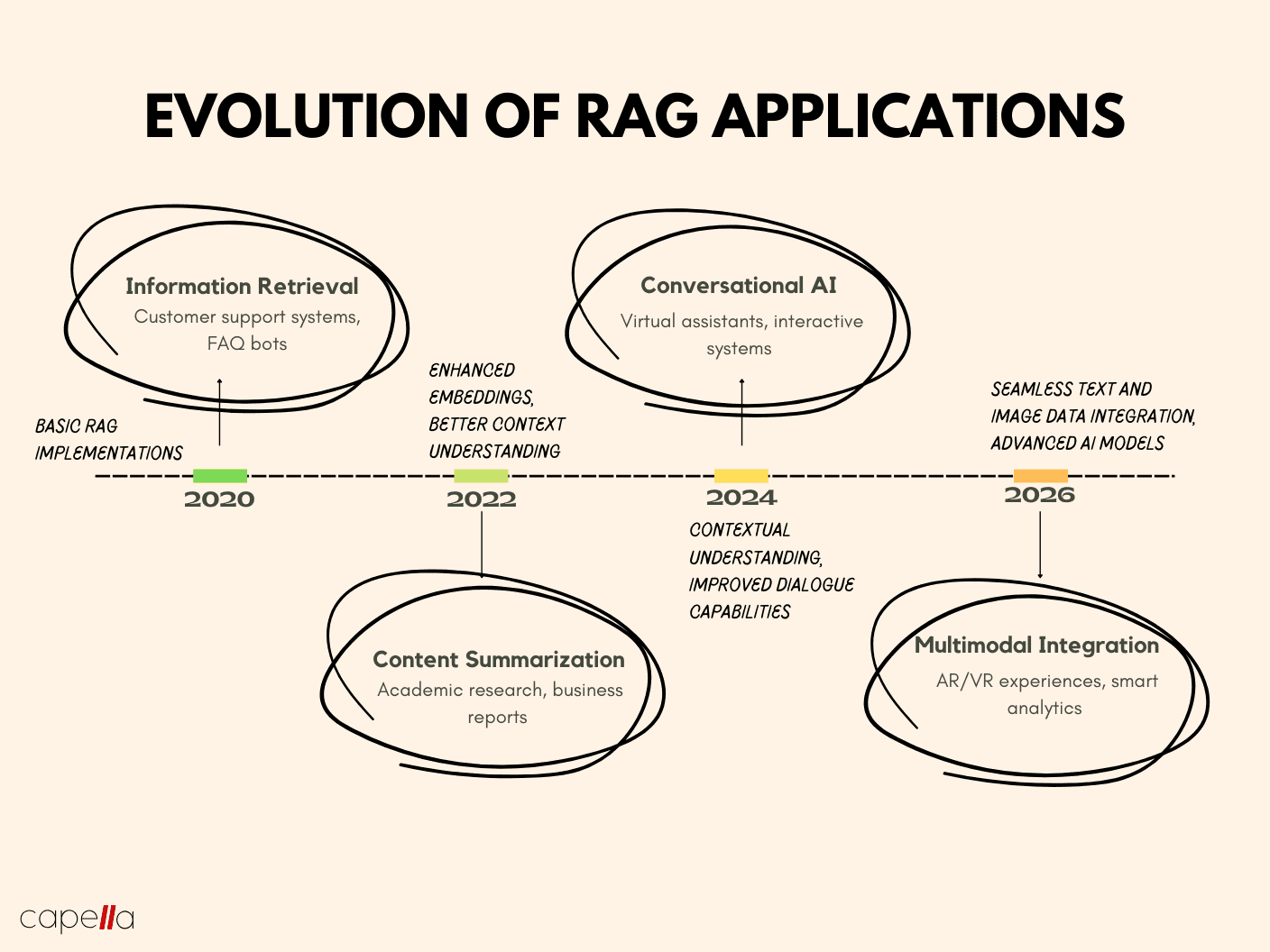

But RAG has evolved. It’s no longer just about finding the right piece of information. Agentic RAG takes it a step further, deciding which questions to ask, which tools to use, and when to use them, and then combining the results to deliver even smarter, well-grounded answers.

In this basic setup, the LLM itself acts as the agent: it decides which retrieval tools to use, when to use them, and how to frame the queries. Essentially, it’s not just answering questions; it’s orchestrating the entire process.
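To make the agent idea concrete, here is a minimal loop in Python. It is a sketch under clear assumptions. `decide` stands in for the LLM's choice of the next step. In a real system, that choice would come from a model, typically via its tool-calling feature. The tools return canned placeholder strings instead of querying real data. `run_agent`, `fake_decide`, and the tool names are all hypothetical.

```python
from typing import Callable

Tool = Callable[[str], str]
# decide(question, observations) returns either
# {"type": "tool", "tool": "<name>", "query": "<text>"} or {"type": "answer", "text": "<text>"}
Decider = Callable[[str, list[str]], dict]

def run_agent(question: str, decide: Decider, tools: dict[str, Tool], max_steps: int = 3) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action = decide(question, observations)
        if action["type"] == "answer":
            return action["text"]
        # The agent chose a tool and framed its own query for it.
        result = tools[action["tool"]](action["query"])
        observations.append(f"{action['tool']}: {result}")
    # Stop instead of looping forever if no answer was produced in time.
    return "I don't know."

# Hypothetical tools with canned results, standing in for real retrievers.
tools: dict[str, Tool] = {
    "search_manuals": lambda q: "[manual excerpt about resetting]",
    "search_warranty_db": lambda q: "[warranty record]",
}

def fake_decide(question: str, observations: list[str]) -> dict:
    # Stand-in for the LLM: pick a tool based on the question, then answer from the result.
    if not observations:
        tool = "search_warranty_db" if "warranty" in question.lower() else "search_manuals"
        return {"type": "tool", "tool": tool, "query": question}
    return {"type": "answer", "text": observations[-1].split(": ", 1)[1]}

answer = run_agent("How do I reset my laptop?", fake_decide, tools)
```

The `max_steps` cap is a design choice. It keeps a model that never settles on an answer from calling tools indefinitely.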

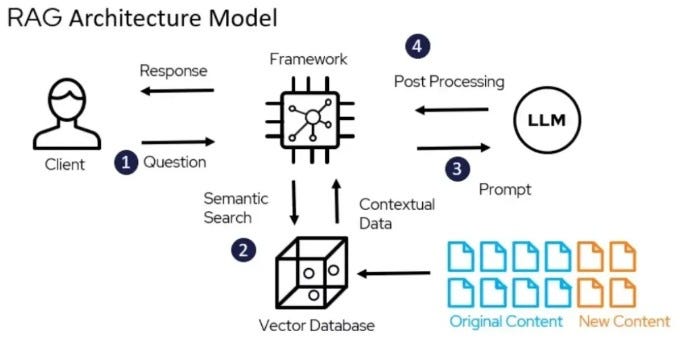

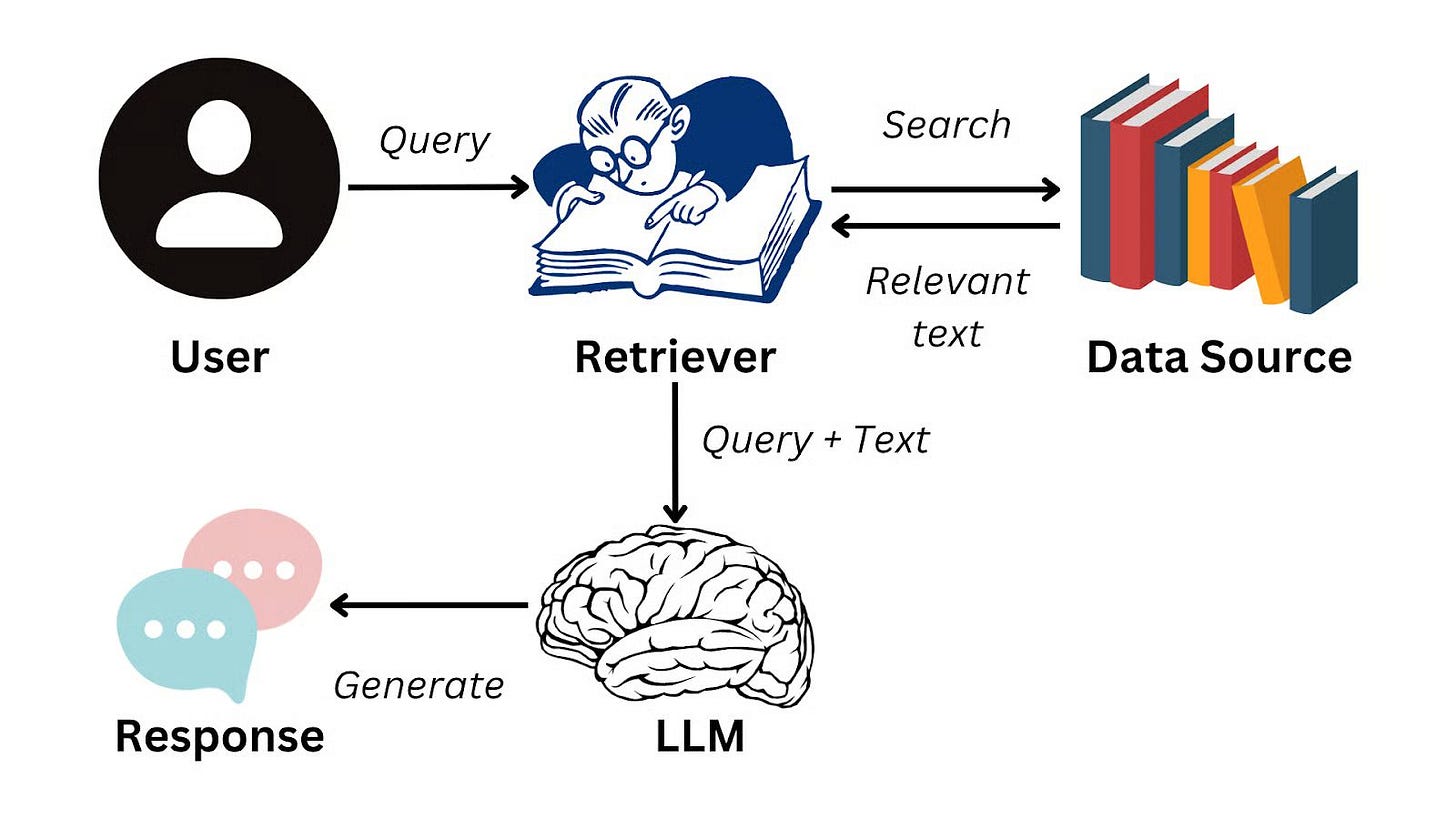

How Does RAG Work?

Now that you’ve got a clear picture of what RAG is, let’s break it down into simple, actionable steps to set up your own RAG framework:

Step 1: Data Collection

The first step is gathering all the data your application needs. For example, if you’re building a customer support chatbot for an electronics company, you’ll want to pull in user manuals, product databases, and a list of FAQs: basically everything your bot needs to answer questions like a pro.
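As a starting point, collection can be as simple as walking a folder of exported manuals and FAQs. This sketch assumes the sources are already plain-text or Markdown files on disk. `collect_documents` is a hypothetical helper, and product databases or APIs would need their own loaders. Keeping the file path as `source` metadata makes it possible to show later where an answer came from.

```python
from pathlib import Path

def collect_documents(folder: str, extensions: tuple[str, ...] = (".txt", ".md")) -> list[dict[str, str]]:
    docs = []
    # Walk the folder recursively in a stable (sorted) order.
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in extensions:
            docs.append({
                # Keep where the text came from so answers can cite their source later.
                "source": path.relative_to(folder).as_posix(),
                "text": path.read_text(encoding="utf-8"),
            })
    return docs

# Example (hypothetical folder name):
# docs = collect_documents("support_docs")
```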

Step 2: Data Chunking

Data chunking is all about breaking big, heavy data into smaller, bite-sized pieces. For example, if you have a 100-page user manual, you can split it into focused sections, each capable of answering a specific customer question.

This approach keeps every chunk laser-focused on one topic, making retrieval faster and more accurate. Instead of scanning through an entire document, the system quickly grabs just the pieces that matter, saving time and reducing irrelevant results.
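One simple way to get focused chunks is to split on blank lines and pack whole paragraphs together up to a word budget. This is a sketch under several assumptions. `chunk_by_paragraph` and `max_words` are illustrative names. Word counts stand in for the token counts that real pipelines usually use. No overlap between chunks is added, although many setups include some.

```python
def chunk_by_paragraph(text: str, max_words: int = 150) -> list[str]:
    """Split text on blank lines, then pack whole paragraphs into chunks of at
    most max_words words. A single paragraph longer than max_words becomes its
    own (oversized) chunk rather than being cut mid-sentence."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for para in paragraphs:
        n = len(para.split())
        # Start a new chunk if adding this paragraph would exceed the budget.
        if current and count + n > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```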

Step 3: Document Embeddings

Once your data is chunked, the next step is to turn it into vector representations: basically, converting text into numbers (embeddings) that capture its meaning.

Why does this matter? Because embeddings let the system understand what a query means, not just what words it uses. So instead of matching keywords word-for-word, it matches based on context, which keeps responses relevant and on point. Want to dive deeper? Check out our blog on the OpenAI API to see how it works and how you can use it to power smarter AI applications.
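A real application would call an embedding model at this step, for example through an embeddings API. To keep the sketch runnable offline, `toy_embed` below is a hypothetical, hand-made two-dimensional "embedding". It groups a few synonyms into the same dimension to mimic "meaning over exact words" in miniature. `build_index` is also a hypothetical helper. Normalizing each vector to unit length is an assumed design choice that makes later cosine comparisons simpler.

```python
import math
from typing import Callable, Sequence

Embedder = Callable[[str], Sequence[float]]

# Toy "embedding": each dimension groups a few words with similar meaning.
TOPICS = [
    {"reset", "restart", "reboot"},       # dimension 0: resetting a device
    {"warranty", "guarantee", "repair"},  # dimension 1: warranty and repairs
]

def toy_embed(text: str) -> list[float]:
    words = text.lower().split()
    return [float(sum(w in topic for w in words)) for topic in TOPICS]

def build_index(chunks: list[str], embed: Embedder) -> list[tuple[str, list[float]]]:
    index = []
    for chunk in chunks:
        vec = [float(x) for x in embed(chunk)]
        # Scale to unit length; all-zero vectors are left unchanged.
        norm = math.sqrt(sum(x * x for x in vec)) or 1.0
        index.append((chunk, [x / norm for x in vec]))
    return index

index = build_index(["Reboot the phone", "Warranty covers repair"], toy_embed)
```

"reboot" and "reset" share a dimension. As a result, a query like "How do I reset it" lands near the first chunk even though the two share no exact words. A real embedding model learns such relationships instead of relying on a hand-written list.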

Step 4: Handling User Queries

When a user sends a query, it also needs to be converted into an embedding, a numeric representation of its meaning. To keep things consistent, the same model used for document embeddings must be used for query embeddings too.

Once that’s done, the system compares the query embedding with all the document embeddings, measuring how close they are with a metric like cosine similarity (higher means closer) or Euclidean distance (lower means closer). The closest chunks are then picked as the most relevant context for the user’s question.
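Here is a minimal Python sketch of that comparison. `top_k` is a hypothetical helper, and the three-item index uses made-up vectors. Note the opposite sort directions: similarity is sorted highest first, and distance is sorted smallest first. This brute-force scan over every chunk is fine for small collections. Larger ones usually rely on a vector database with approximate nearest-neighbor search.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Higher means closer; 1.0 means the vectors point in the same direction.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(
    query_vec: list[float],
    index: list[tuple[str, list[float]]],
    k: int = 3,
    metric: str = "cosine",
) -> list[str]:
    if metric == "cosine":
        # Sort by similarity, highest first.
        ranked = sorted(index, key=lambda item: cosine_similarity(query_vec, item[1]), reverse=True)
    elif metric == "euclidean":
        # Sort by straight-line distance, smallest first (math.dist needs Python 3.8+).
        ranked = sorted(index, key=lambda item: math.dist(query_vec, item[1]))
    else:
        raise ValueError(f"unknown metric: {metric}")
    return [chunk for chunk, _ in ranked[:k]]

index = [
    ("reset guide", [1.0, 0.0]),
    ("warranty policy", [0.0, 1.0]),
    ("general faq", [0.7, 0.7]),
]
query_vec = [0.9, 0.1]  # assumed to come from the same embedding model as the index
best = top_k(query_vec, index, k=2)
```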

Step 5: Generating Responses with an LLM

The retrieved text chunks, along with the user’s original query, are then passed into a language model. Using this context, the model crafts a clear and accurate response, which is delivered back to the user through the chat interface.
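Wiring the steps together, the generation step mostly comes down to building the prompt and calling a model. This sketch deliberately keeps the model behind a plain function. `retrieve` and `llm` are hypothetical callables that you would back with your own vector search and LLM client. Returning a fixed fallback when nothing is retrieved is one possible design choice, not a requirement of RAG.

```python
from typing import Callable

Retriever = Callable[[str, int], list[str]]  # (question, k) -> top-k chunks
LLM = Callable[[str], str]                     # prompt -> generated text

FALLBACK = "Sorry, I couldn't find that in our documentation."

def answer_query(question: str, retrieve: Retriever, llm: LLM, k: int = 3) -> str:
    chunks = retrieve(question, k)
    if not chunks:
        # Nothing relevant was retrieved, so skip the model call instead of letting it guess.
        return FALLBACK
    context = "\n\n".join(chunks)
    prompt = (
        f"QUESTION:\n\n{question}\n\nCONTEXT:\n\n{context}\n\n"
        "Using the CONTEXT provided, answer the QUESTION. "
        "If the CONTEXT doesn't contain the answer, say you don't know."
    )
    return llm(prompt)
```

To try it without any API, pass `lambda prompt: prompt` as `llm`. This shows exactly what the model would receive.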

Here’s a simple flowchart to show how the entire RAG process works:

To make all these steps work smoothly, you can use a data framework like LlamaIndex.

LlamaIndex helps you easily connect external data sources with language models like GPT‑3, managing the entire flow of information efficiently. It’s a handy way to build your own LLM-powered applications without getting lost in complex integrations.

Why Use RAG to Improve LLMs? An Example

To really understand how RAG works, let’s look at a real-world scenario many businesses face.

Imagine you’re an executive at an electronics company that sells smartphones and laptops. You want to build a customer support chatbot that can instantly answer questions about product specs, troubleshooting, warranty details, and more.

Naturally, you think of using powerful LLMs like GPT‑3 or GPT‑4 to make this happen. But there’s a catch: large language models have certain limitations that can hurt the customer experience.

Lack of Specific Information

The problem with standard language models is that they often give generic answers based only on their training data. So, if a customer asks about your specific software or needs detailed troubleshooting help, a traditional LLM might miss the mark.

Why? Because it hasn’t been trained on your company’s unique data. On top of that, most models have a training cutoff date, which means they can’t provide the most up‑to‑date information.

Hallucinations

Another challenge with LLMs is hallucination: they sometimes make up answers and present them as facts, sounding confident even when they’re wrong. And if the model doesn’t have a clear answer, it might give responses that are completely off-topic, leaving users frustrated and hurting the overall customer experience.

Generic Responses

Standard language models often give one‑size‑fits‑all answers that don’t consider specific contexts or individual user needs. That’s a problem for customer support, where personalization is key to a great experience.

This is where RAG comes in. It combines the broad knowledge of LLMs with your own data sources like product databases and user manuals to deliver responses that are accurate, reliable, and tailored to your business. In short, it gives your AI the context it needs to truly understand your customers.

Applications of Retrieval Augmented Generation (RAG)

RAG isn’t limited to one use case; it’s transforming AI across multiple industries and scenarios. Here are some key areas where it shines:

Chatbots and AI Assistants: RAG-powered systems excel in question-answering scenarios, providing context-aware and detailed answers from extensive knowledge bases. These systems enable more informative and engaging interactions with users.

Education Tools: RAG can significantly improve educational tools by offering students access to answers, explanations, and additional context based on textbooks and reference materials. This facilitates more effective learning and comprehension.

Legal Research and Document Review: Legal professionals can leverage RAG models to streamline document review processes and conduct efficient legal research. RAG assists in summarizing statutes, case law, and other legal documents, saving time and improving accuracy.

Medical Diagnosis and Healthcare: In the healthcare domain, RAG models can serve as valuable tools for doctors and medical professionals, giving them access to the latest medical literature and clinical guidelines to support diagnosis and treatment recommendations.

Language Translation with Context: RAG enhances language translation tasks by considering the context in knowledge bases. This approach results in more accurate translations, accounting for specific terminology and domain knowledge, which is particularly valuable in technical or specialized fields.

These applications highlight how RAG’s integration of external knowledge sources empowers AI systems to excel in various domains, providing context-aware, accurate, and valuable insights and responses.

Conclusion

After diving deep into RAG, one thing is clear: this is a genuine game-changer for AI.

RAG tackles one of AI’s biggest flaws (sounding smart but sometimes being wrong) by letting models actually look things up before answering. And that changes everything.

Instead of polished but unreliable answers, we now have systems pulling from real, up-to-date data. Think of customer support bots that know your product details, or medical assistants referencing the latest research. This is AI that’s not just clever but truly useful.

The rise of agentic RAG takes it even further. These are systems that can decide what to ask, where to look, and how to combine answers, getting us closer to AI that genuinely understands context. For businesses, that means tailored, reliable responses, not generic replies.

RAG isn’t just another upgrade; it’s the missing link between AI’s incredible language skills and real-world reliability. It’s what’s turning AI from an exciting experiment into a trustworthy everyday tool.

The future of AI isn’t just smart. It’s informed.