What is an SLM? (Small Language Model)

Understanding Small Language Models vs. Large Language Models in 2025.

In the modern business landscape, artificial intelligence has become essential for every organization. While the AI revolution initially captivated the world with LLMs, a fresh concept is now capturing attention in the headlines: "SLM". What exactly are these technologies, and what sets them apart from one another?

Before diving into these specifics, we need to first grasp what constitutes a language model. These systems are built to understand, create, and execute language tasks similar to humans, after being trained on massive datasets. Yet not every language model is identical – they vary in scale, ranging from large to small versions, each possessing distinct advantages and limitations designed for specific needs.

This blog will explore Small Language Models alongside Large Language Models, examining their functions, core distinctions, and practical uses. We'll break down the SLM vs LLM comparison in simple terms.

What are Small Language Models?

Small Language Models, or SLMs, represent natural language processing systems built with streamlined designs that demand minimal computing resources and memory usage. Generally, SLMs contain anywhere from millions to several billion parameters, while their larger counterparts feature hundreds of billions of parameters. These parameters serve as internal settings acquired through the training process, helping the model handle activities such as comprehending, producing, or categorizing human communication.

In practice, this makes them resource-friendly alternatives to the large language models that have gained widespread attention. Frontier systems such as GPT-4o are widely believed to have hundreds of billions of parameters or more (OpenAI has not published the figure), whereas SLMs usually have between a few million and several billion.
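To make "parameters" a bit more concrete, here is a rough back-of-the-envelope sketch. It assumes a standard transformer where each layer holds about 12 × d_model² weights, a common approximation that ignores biases, layer norms, and position embeddings. The function name and the "large" shape are hypothetical, chosen only for illustration.

```python
def estimate_transformer_params(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Rough parameter count for a standard transformer.

    Assumption: each layer holds about 12 * d_model**2 weights
    (4 * d_model**2 for the attention projections and 8 * d_model**2
    for a feed-forward block with a 4x hidden size). Biases, layer
    norms, and position embeddings are ignored.
    """
    per_layer = 12 * d_model * d_model
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings


# DistilBERT-like shape: 6 layers, hidden size 768, 30,522-token vocabulary.
small = estimate_transformer_params(n_layers=6, d_model=768, vocab_size=30522)

# Hypothetical large shape, picked only to show how quickly the count grows.
large = estimate_transformer_params(n_layers=80, d_model=8192, vocab_size=128000)

print(f"small: {small / 1e6:.0f}M parameters")   # small: 66M parameters
print(f"large: {large / 1e9:.1f}B parameters")
```

The point is that depth and width multiply: going from 6 narrow layers to 80 wide ones moves the count from tens of millions into the tens of billions.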

The key characteristics of SLMs are:

Efficiency: SLMs operate without requiring the enormous processing capabilities that LLMs need. This advantage makes them ideal for deployment on resource-constrained devices such as mobile phones, tablets, or internet-connected gadgets used in edge computing scenarios.

Accessibility: Organizations working within budget constraints can deploy SLMs without investing in expensive, high-performance hardware. They work particularly well for local installations where maintaining data privacy and security takes priority, since they can function independently of cloud-based systems.

Customization: SLMs offer straightforward customization options. Their compact design allows them to quickly adjust to specialized tasks and particular industry requirements. This flexibility makes them excellent choices for targeted uses in areas like customer service, medical applications, or educational technology.

Faster inference: SLMs deliver faster response times due to their reduced parameter count. This speed advantage makes them perfectly suited for immediate-response applications such as conversational agents, digital assistants, or any platform requiring quick decision-making. Users experience minimal waiting periods, which proves valuable in situations demanding low response delays.
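Why does a lower parameter count mean faster responses? One simplified way to reason about it: when generating text one token at a time, the hardware has to read roughly every weight once per token, so speed is capped by memory bandwidth divided by model size. The sketch below works under that assumption only. The 100 GB/s bandwidth figure and the function name are hypothetical, and real throughput will be lower.

```python
def max_tokens_per_second(n_params: float, bytes_per_param: float,
                          memory_bandwidth_gb_s: float) -> float:
    """Upper bound on single-stream decoding speed.

    Assumption: generating one token reads every weight once, so the
    rate is capped by memory bandwidth divided by the model's size in
    bytes. Cache reads, compute time, and overhead are ignored.
    """
    model_bytes = n_params * bytes_per_param
    return memory_bandwidth_gb_s * 1e9 / model_bytes


BANDWIDTH_GB_S = 100.0  # hypothetical device, for illustration only

for name, n_params in [("3B model", 3e9), ("70B model", 70e9)]:
    # 2 bytes per parameter corresponds to 16-bit weights.
    rate = max_tokens_per_second(n_params, 2.0, BANDWIDTH_GB_S)
    print(f"{name}: at most ~{rate:.1f} tokens/s")
```

On the same device, the bound scales inversely with size, which is the intuition behind the "minimal waiting periods" claim.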

Why Small Language Models (SLMs)?

SLMs present an attractive solution by boosting capabilities while cutting expenses. They can achieve much of the effectiveness and output quality that comes with LLMs, but with lower costs and faster response times.

This progress stems from industry breakthroughs and techniques that enhance model effectiveness through different structural and training improvements, such as:

Knowledge distillation: This process involves teaching a compact "student" model to mirror the responses of a more extensive "teacher" model.

Fine-tuning: Using high-quality, specialized datasets to refine smaller models and boost their effectiveness for particular applications.

Quantization: Reducing the numerical precision of model weights (and sometimes activation values), such as converting from 16-bit to 4-bit, to shrink memory use, increase speed, and lower costs.

Model pruning and sparsity: Removing unnecessary or low-magnitude weights from a model so that it becomes smaller and cheaper to run, ideally with little loss in output quality.

Mixture of Experts (MoE): Splitting parts of the network into several specialized "expert" sub-networks and routing each input to only a few of them. The total parameter count can stay large, but only a portion of the network activates at once, which reduces the compute needed per token.

Agent architecture: Combining smaller models with tools, retrieval, or rule-based reasoning components to manage complicated analytical tasks.

Prompt tuning: Learning a small set of prompt embeddings for a task while keeping the model's own weights frozen, which improves result quality while training only a tiny number of parameters.
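Two of the techniques above, knowledge distillation and quantization, are easy to illustrate in a few lines. The following is a minimal sketch in plain Python, not a production recipe: the function names are hypothetical, the distillation loss is the commonly used temperature-softened KL divergence, and the quantizer is a simple symmetric 4-bit scheme with one scale for the whole list of weights.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened outputs, scaled by temperature**2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric 4-bit quantization: map floats to integers in [-7, 7]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quantized = [max(-7, min(7, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in quantized]


teacher = [4.0, 1.0, 0.5]
print(distillation_loss(teacher, teacher))          # student matches teacher
print(distillation_loss(teacher, [1.0, 4.0, 0.5]))  # student disagrees

weights = [0.70, -0.33, 0.12, 0.02]
q, scale = quantize_int4(weights)
print(q)  # [7, -3, 1, 0]
print(dequantize(q, scale))
```

The loss is zero when the student reproduces the teacher's output distribution and grows as they diverge. The quantized weights need only 4 bits each plus one shared scale, at the cost of a small rounding error per weight.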

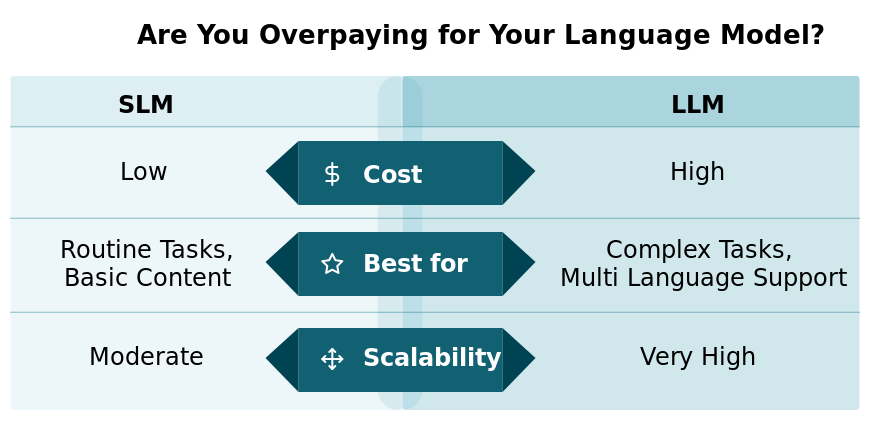

SLM vs. LLM in Detailed Explanation

Let’s explore a few of the more specific ways large language models differ from small language models, in the following side-by-side comparisons.

Model Scale & Structure

Large Language Models: These expansive systems undergo training on extensive and diverse information collections to provide superior comprehension and effectiveness for complicated operations. Their parameter counts span from billions to trillions of components.

Small Language Models: Because these compact systems are often built for specialized applications, they are frequently trained or fine-tuned on focused, niche-specific datasets. Their parameter counts typically fall between a few million and several billion.

Architectural Differences

Large Language Models: Given their enormous parameter requirements, these systems need substantial processing capabilities, usually depending on graphics processing units or tensor processing units for both development and implementation. They also need comprehensive and varied information sources spanning multiple fields to achieve effective adaptation across different applications. To identify complex data relationships, LLMs use deep transformer structures or other advanced neural network designs.

Small Language Models: In contrast to LLMs, SLMs operate with fewer parameters, typically ranging from a few million to several billion. Their compact nature makes them more portable and less demanding in terms of processing power. Their architecture is usually shallower and narrower, and the training data is often more limited or targeted, customized for particular applications or specialized areas.

Performance and Capabilities

Large Language Models: LLMs can achieve excellent results on demanding applications including sophisticated text creation, advanced question handling, and comprehensive content development. Their extensive training background and substantial parameter structure allow them to manage various applications including language conversion, content condensation, creative composition, and programming support.

Small Language Models: SLMs are optimized to excel in particular fields or applications where their training information proves most valuable. They often receive training on industry or organization-specific information collections. Since these systems are built for targeted business applications or specialized knowledge areas, they are quicker to develop and implement.

Fine-tuning

Large Language Models: Fine-tuning an LLM typically requires larger datasets and considerably more compute than fine-tuning an SLM.

Small Language Models: Compact models can be fine-tuned on smaller, more focused datasets, often on modest hardware.

Inference speed

Large Language Models: These models typically need multiple GPUs or other accelerators working in parallel to produce results at an acceptable speed.

Small Language Models: Users can operate these models on personal computers while still producing results within reasonable timeframes.

Use-cases

Large Language Models: These comprehensive systems aim to replicate human cognitive abilities across broad applications and perform exceptionally well in demanding tasks including content creation or advanced information analysis.

Small Language Models: Compact models work best for applications requiring rapid responses and reduced processing demands, including basic customer support systems or straightforward information retrieval.

For example, a specialized Small Language Model can be created for the medical field and customized with healthcare vocabulary, treatment procedures, and patient care guidelines. Training on medical publications, anonymized health records, and healthcare-focused materials allows these models to produce highly precise and contextually appropriate results.

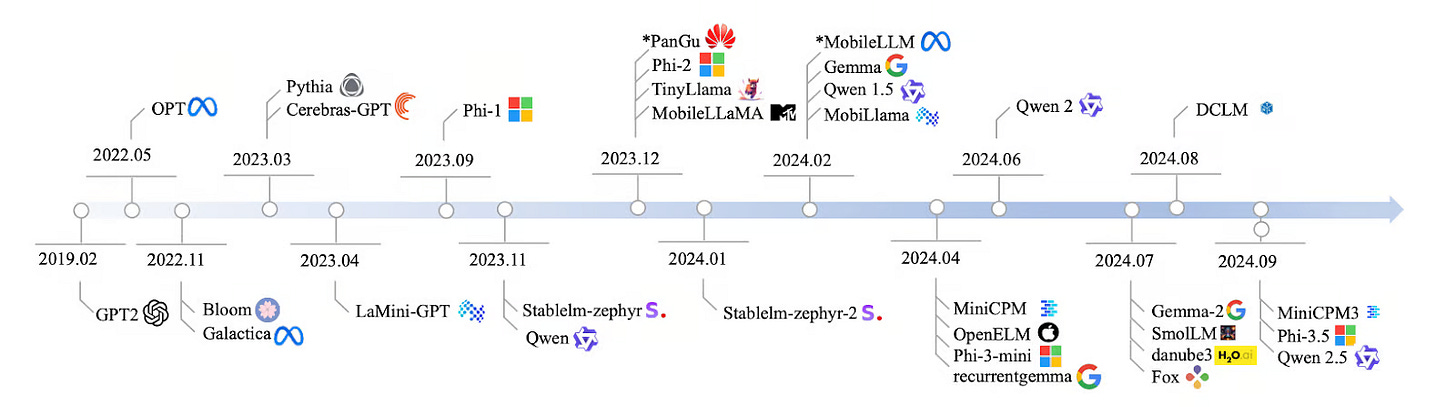

Top 10 Small Language Models for 2025

Small language models, or SLMs, represent compact and efficient systems that operate without requiring enormous server infrastructure, setting them apart from their large language model counterparts. These systems are engineered for quick processing and immediate performance, making them capable of running directly on our mobile devices, tablets, or wearable technology.

1. o3-mini

Released on January 31, 2025, o3-mini is a small model in OpenAI's reasoning series. Despite its smaller size, OpenAI reports that, at medium reasoning effort, it roughly matches the full o1 on math, coding, and science benchmarks while responding faster. It supports text inputs and outputs and offers adjustable reasoning levels (low, medium, high).

Parameters: Not publicly disclosed.

Access: ChatGPT (https://chat.openai.com), OpenAI API (https://platform.openai.com/docs/api-reference), Microsoft Azure OpenAI Service (https://azure.microsoft.com/en-us/products/ai-services/openai-service)

Open source: No, proprietary.

2. Qwen2.5/Qwen3: 0.5B, 1.5B, and 7B

Qwen2.5 and the newer Qwen3 are successive generations of Alibaba's Qwen model family. The smaller Qwen2.5 models include 0.5 billion, 1.5 billion, and 7 billion parameter versions, while Qwen3 uses slightly different sizes (for example 0.6B, 1.7B, and 8B). The 0.5B version suits ultra-lightweight applications, while the 7B model delivers robust performance for complex tasks like summarization and advanced text generation. These models are popular in practical applications where speed and efficiency are crucial.

Parameters: 0.5 billion, 1.5 billion, and 7 billion versions (Qwen2.5), among other sizes

Access: Hugging Face (https://huggingface.co/Qwen), Qwen Official (https://qwenlm.github.io/)

Open source: Yes, open weights. Most sizes are released under the Apache 2.0 license.

3. Gemma 3

Released by Google on March 12, 2025, Gemma 3 comes with both text-only and multimodal capabilities, representing a significant advancement over previous Gemma versions. It launched in 1B, 4B, 12B, and 27B parameter sizes. The 1B model is text-only and is the one best suited to edge deployment, while the larger sizes also accept image input.

Parameters: 1 billion, 4 billion, 12 billion, and 27 billion versions

Access: Hugging Face (https://huggingface.co/google/gemma-2b), Google AI Studio (https://aistudio.google.com/)

Open source: Open weights, released under Google's Gemma Terms of Use.

4. Mistral Small 3

Mistral Small 3 is a language model designed to provide robust language and instruction-following capabilities at low latency. Mistral positions it as covering roughly the 80% of generative AI tasks that do not need a much larger model. Despite its 24 billion parameters, it can be deployed locally on a single Nvidia RTX 4090 or a MacBook with 32GB of RAM once quantized.

Parameters: 24 billion parameters

Access: Hugging Face (https://huggingface.co/mistralai/Mistral-Small-24B-Instruct-2501), Ollama (https://ollama.com/library/mistral-small), Kaggle (https://www.kaggle.com/models/mistral-ai/mistral-small-24b)

Open source: Yes, Apache 2.0 license.
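A quick calculation shows why quantization matters for running a 24-billion-parameter model on a single consumer GPU. This is a rough sketch that counts the weights only (the cache and activations need extra memory on top), and the function name is hypothetical. The RTX 4090 has 24 GB of memory.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 24e9      # Mistral Small 3 parameter count, as listed above
GPU_VRAM_GB = 24.0   # memory on an RTX 4090

for bits in (16, 8, 4):
    need = weight_memory_gb(N_PARAMS, bits)
    # Strictly less than: some headroom is needed beyond the weights.
    verdict = "fits" if need < GPU_VRAM_GB else "does not fit"
    print(f"{bits}-bit weights: {need:.0f} GB -> {verdict} in {GPU_VRAM_GB:.0f} GB")
```

At 16-bit precision the weights alone take about 48 GB, twice what the card offers. At 4-bit they drop to about 12 GB, which leaves room for everything else the model needs at runtime.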

5. Phi-4

Phi-4 is a 14-billion parameter language model developed by Microsoft Research. It is designed to excel in reasoning tasks while maintaining computational efficiency. Unlike many larger models, Phi-4 aims to strike a balance between capability and resource efficiency, making it a practical tool for real-world applications.

Parameters: 14 billion parameters

Access: Microsoft Azure AI (https://azure.microsoft.com/en-us/products/ai-services), Hugging Face (https://huggingface.co/microsoft/Phi-4)

Open source: Yes, open weights under the MIT license.

6. Llama 3.2: 1B and 3B

Llama 3.2 is Meta's compact yet capable model family, designed to cater to various natural language processing tasks while maintaining efficiency and adaptability. The lightweight text models come in 1 billion and 3 billion parameter versions aimed at mobile and edge deployments. The same release also includes larger 11 billion and 90 billion parameter vision models for server-side applications.

Parameters: 1 billion and 3 billion versions (text), plus 11 billion and 90 billion versions (vision)

Access: Hugging Face (https://huggingface.co/meta-llama/Llama-3.2-1B), Meta AI (https://llama.meta.com/)

Open source: Open weights, released under the Llama 3.2 Community License.

7. Mistral NeMo: 12B

Mistral NeMo is a 12-billion-parameter language model built by Mistral AI in collaboration with NVIDIA. It focuses on delivering high-quality language understanding and generation while staying small enough to run efficiently, supports a context window of up to 128k tokens, and is positioned as a drop-in replacement for Mistral 7B.

Parameters: 12 billion parameters

Access: Hugging Face (https://huggingface.co/mistralai/Mistral-Nemo-Instruct-2407), Mistral AI (https://mistral.ai/)

Open source: Yes, Apache 2.0 license.

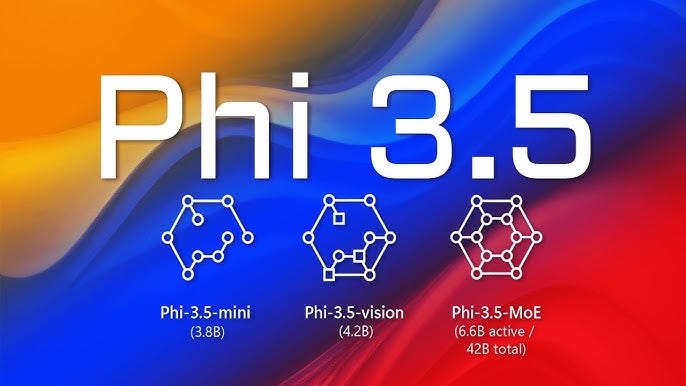

8. Phi-3.5 Mini: 3.8B

Phi-3.5 Mini is a compact model in the Phi language model series developed by Microsoft. It has 3.8 billion parameters and supports a 128k-token context window, offering lightweight deployment capabilities. The same release includes larger siblings, Phi-3.5-MoE and Phi-3.5-vision, for applications demanding higher accuracy or image input.

Parameters: 3.8 billion parameters

Access: Microsoft Azure AI (https://azure.microsoft.com/en-us/products/ai-services), Hugging Face (https://huggingface.co/microsoft/Phi-3.5-mini-instruct)

Open source: Yes, open weights under the MIT license.

9. SmolLM: 135M to 1.7B

SmolLM is a family of lightweight language models from Hugging Face, designed to provide efficient natural language processing capabilities while maintaining a reduced computational footprint. It is available in three sizes: 135 million, 360 million, and 1.7 billion parameters.

Parameters: 135 million, 360 million, and 1.7 billion versions

Access: Hugging Face (https://huggingface.co/HuggingFaceTB/SmolLM-135M), SmolLM GitHub (https://github.com/huggingface/smollm)

Open source: Yes, Apache 2.0 license.

10. DistilBERT

DistilBERT is a smaller, faster, and lighter version of BERT that reduces the size by 40% while retaining 97% of its language understanding capabilities. The standard version has approximately 66 million parameters compared to BERT-base's 110 million. While older, it remains highly relevant for NLU tasks requiring efficiency.

Parameters: 66 million parameters.

Access: Hugging Face (https://huggingface.co/distilbert-base-uncased), Transformers Library (https://huggingface.co/docs/transformers/model_doc/distilbert)

Open source: Yes, Apache 2.0 license.

Conclusion

Think of it this way - you don't need a Ferrari to go grocery shopping. That's what small language models are all about.

Big AI models like the ones behind ChatGPT are powerful but heavy and expensive to run. Small language models are like the efficient compact cars of AI - they're faster, cheaper, and the smallest ones can run on your phone without a constant internet connection.

The cool part? They're getting really good at specific jobs. Need a customer service bot or quick answers? There's probably a small model that can do it without costing a fortune.

In 2025, we have great options, from models with tens of millions of parameters to larger 24-billion-parameter ones. They're all way smaller than the giants but still get the job done.

Bottom line: You don't always need the biggest, most expensive AI. Sometimes the smaller, smarter choice works better for what you actually need. It's about picking the right tool for the job - and small language models are proving to be a good fit for many everyday tasks.